Databricks, the Unified Analytics Platform built on popular open-source projects such as Apache Spark, Delta Lake, and MLflow, gives data teams a collaborative environment for turning raw data into powerful insights. Its toolkit spans everything from ETL to machine learning, so teams can explore data, build models, and bring ideas to life together on a single platform. As adoption grows, understanding its pricing model becomes crucial. Databricks follows a pay-as-you-go approach: users are charged only for the resources they use, measured in Databricks Units (DBUs), which represent computational usage.

In this article, we will cover how the Databricks pricing model works, including key concepts such as Databricks Units (DBUs) and their cost, the factors that affect Databricks costs, common pricing challenges, and a whole lot more!

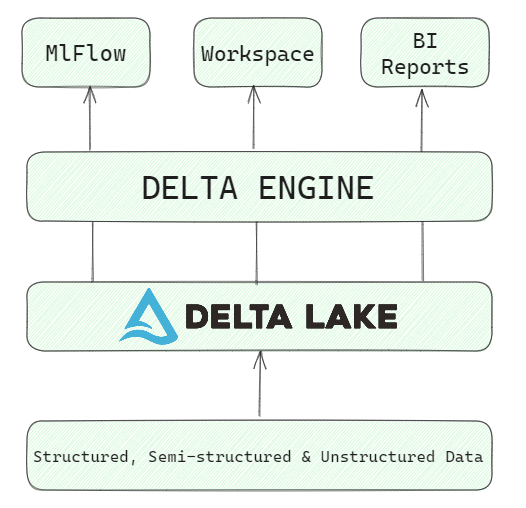

Databricks Lakehouse Vision—Understanding Databricks Architecture

Before we dive into the core Databricks pricing, let's take a moment to understand its architecture first.

Databricks provides a unified data analytics platform built on top of Apache Spark to enable data engineering, data science, machine learning, and analytics workloads. The core components of the Databricks architecture include:

- Delta Lake: Delta Lake acts as an optimized storage layer that brings reliability to data lakes. It provides ACID transactions, scalable metadata handling, and unified streaming/batch processing. Delta Lake extends Parquet data files with a transaction log to provide ACID capabilities on top of cloud object stores like S3. Metadata operations are made highly scalable through log-structured metadata handling. Delta Lake is fully compatible with Apache Spark APIs. It tightly integrates with Structured Streaming to enable using the same data copy for both batch and streaming workloads.

- Delta Engine: Delta Engine is an optimized query engine designed for efficient SQL query execution on data stored in Delta Lake. It uses techniques like caching, indexing, and query optimization to enable fast data retrieval and analysis on large data sets.

- Built-in Tools: Databricks includes ready-to-use tools for data engineering, data science, business intelligence, and machine learning operations. These tools integrate with Delta Lake and Delta Engine to provide a comprehensive environment for working with data.

- Unified Workspace: All the above components are accessed through a single workspace user interface hosted on the cloud, which allows data engineers, data scientists, and business analysts to collaborate on the same data in one platform.


Databricks Pricing Model Explained—What Drives Your Databricks Costs?

The Databricks pricing model is pay-as-you-go: users are charged only for the resources they actually use. The core billing unit is the Databricks Unit (DBU), which represents the computational resources used to run workloads. DBU consumption depends on factors such as cluster size, runtime, and the features enabled.

What is a DBU in Databricks?

Databricks Units or DBUs encapsulate the total use of compute resources like CPU, memory, and I/O to run workloads on Databricks.

To calculate the overall cost:

Databricks DBUs consumed × Databricks DBU rate = Total cost

Databricks DBU cost varies based on the following six key factors:

- Cloud Provider: Databricks offers different Databricks DBU cost depending on the cloud provider (AWS, Azure, or GCP) where the Databricks workspace is deployed.

- Region: The cloud region where the Databricks workspace is located can influence the Databricks DBU cost.

- Databricks Edition: Databricks offers tiered editions (Standard, Premium, and Enterprise) with varying Databricks DBU cost. Enterprise edition offers access to advanced features and typically has the highest Databricks DBU cost, followed by Premium and Standard.

- Instance Type: The instance type (e.g., memory-optimized, compute-optimized, etc.) used for the Databricks cluster affects the Databricks DBU cost.

- Compute Type: Databricks further categorizes DBU pricing by the type of compute workload, including Serverless compute. Each compute type is assigned different Databricks DBU costs to reflect the varying resource demands of these tasks.

- Committed Use: Users can secure discounts on DBU rates by entering into committed-use contracts, which commit to a specific amount of usage over a predetermined period. Larger commitments generally earn larger discounts, so this option is particularly beneficial for predictable, long-term workloads.

TL;DR: Databricks DBU pricing varies by workload type, cloud provider, and region. In addition to DBUs, you pay the cloud provider directly for associated resources such as VMs, storage, and networking. Serverless DBU rates already include the underlying compute instances.
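To make the two-part bill concrete, here is a minimal Python sketch for classic (non-serverless) compute. The helper name `estimate_classic_cost` is hypothetical. The inputs are also illustrative assumptions rather than real quotes: 1,000 DBUs, the $0.15 per DBU Jobs rate quoted later for AWS Premium, and $120 of cloud charges.

```python
def estimate_classic_cost(dbus_consumed: float, dbu_rate: float, cloud_infra_cost: float) -> dict:
    """Hypothetical helper: two-part bill for classic compute, i.e. DBU charges
    from Databricks plus the cloud provider's own VM/storage/network charges."""
    if min(dbus_consumed, dbu_rate, cloud_infra_cost) < 0:
        raise ValueError("inputs must be non-negative")
    databricks_charge = dbus_consumed * dbu_rate  # DBUs consumed x DBU rate
    return {
        "databricks": round(databricks_charge, 2),
        "cloud_provider": round(cloud_infra_cost, 2),  # billed separately by AWS/Azure/GCP
        "total": round(databricks_charge + cloud_infra_cost, 2),
    }


# Hypothetical month: 1,000 DBUs at $0.15 per DBU plus $120 of cloud charges.
print(estimate_classic_cost(1_000, 0.15, 120.0))
# {'databricks': 150.0, 'cloud_provider': 120.0, 'total': 270.0}
```

For serverless compute, the instance cost is already folded into the DBU rate. In that case `cloud_infra_cost` would only need to cover items such as storage and networking.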

Databricks aligns its pricing with how different users work on the Lakehouse platform. Data engineers, data analysts, data scientists, and business analysts can each optimize costs for their specific use cases.

This consumption-based approach eliminates heavy upfront costs. It also provides the flexibility to scale up and down as business requirements change, because you pay only for what you use.

Key Databricks Products—How Does Databricks Pricing Work?

Databricks provides multiple products on its Lakehouse Platform for different data workloads. Each product has usage measured in Databricks DBUs consumed, which when multiplied by the Databricks DBU cost provides the final Databricks cost.

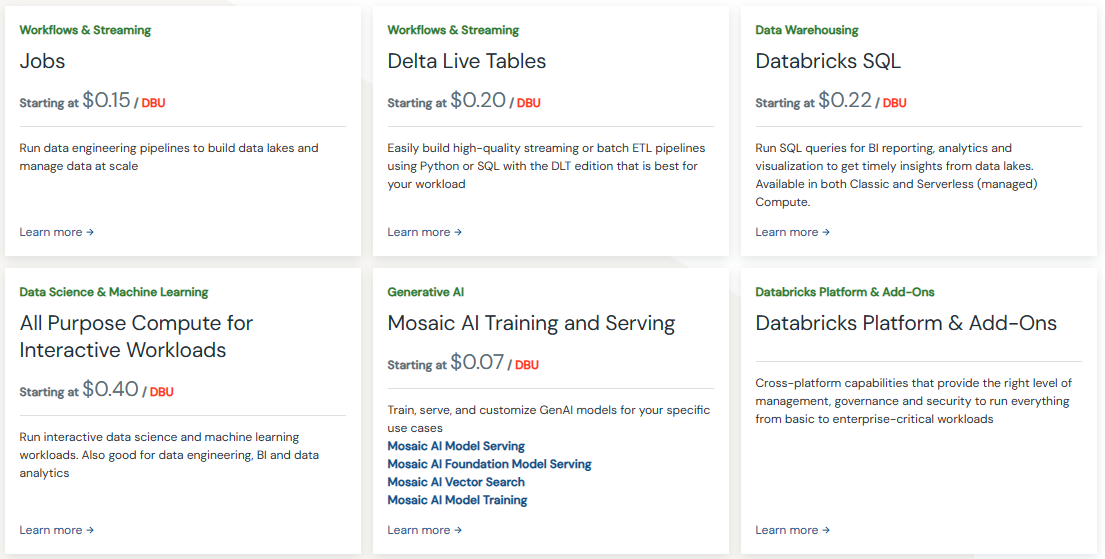

Next comes a breakdown of the major Databricks products and how they are priced. Before diving into those pricing models, though, let's get a good grasp on what the free trial has to offer. After all, it's always nice to test drive something before making any commitments!

What Does the Databricks Free Trial Provide?

Databricks offers a 14-day free trial for users to experience the capabilities of its comprehensive platform. The trial provides access to the complete Databricks platform, including its data engineering, data science, and machine learning tools, giving users a hands-on experience. Available on AWS, Azure, and Google Cloud, the trial allows you to:

- Ingest data from hundreds of sources

- Build data pipelines using a simple declarative approach

- Access instant, elastic compute resources

- Explore Databricks SQL and Delta Lake

During the trial, you can use the full Databricks platform, including its tools for building production-quality generative AI applications.

Note: The Databricks trial is free, but your cloud provider may charge you for any resources used during the trial period.

Now that we've got a solid grasp on the Databricks free trial, let's take a closer look at their pricing model and see how it all works.

1. Databricks Pricing: Jobs (Starting at $0.15 / DBU)

Databricks Jobs provides a fully managed platform for running production ETL workflows at scale. It lets you ingest and transform batch and streaming data on the Databricks Lakehouse Platform using optimized clusters that auto-scale up and down to match the workload.

Key capabilities and benefits of Databricks Jobs include:

- Auto-scaling clusters to match workload needs: Jobs can automatically scale clusters up and down so that each job gets the compute it needs. This reduces costs by avoiding idle resources.

- Support for different cluster types and configurations: Jobs can run on clusters tuned for specific workloads such as data engineering, data science, and analytics.

- Integration with Delta Lake for reliability: Jobs integrates natively with Delta Lake for data pipeline reliability, data quality, and lineage.

- Scheduling, recurrence, and dependencies: Jobs makes it easy to schedule data pipelines on fixed intervals, set start and end times, and configure pipeline dependencies.

- Integration with monitoring tools: Jobs provides metrics and integrates with tools like Grafana for monitoring workloads. Alerts can be set up to detect issues.

Databricks offers two main pricing models for running jobs on their platform: Classic/Classic Photon clusters and Serverless (Preview).

➤ Classic/Classic Photon Clusters

Classic and Classic Photon clusters provide massively parallel, high-performance compute for running data engineering pipelines, building data lakes, and managing data at scale. Their pricing is based on Databricks Units (DBUs).

Cost per DBU varies based on the chosen plan (Standard, Premium, or Enterprise) and the cloud provider (AWS, Azure, or Google Cloud).

For example,

AWS Databricks Pricing (AP Mumbai region):

- Premium plan: $0.15 per DBU

- Enterprise plan: $0.20 per DBU

Azure Databricks Pricing (US East region):

- Standard plan: $0.15 per DBU

- Premium plan: $0.30 per DBU

GCP Databricks Pricing:

- Premium plan: $0.15 per DBU

➤ Serverless (Preview):

This is a fully managed, elastic serverless platform to run jobs. The pricing for Serverless includes the underlying compute costs and is also based on DBUs.

A limited-time promotion that started in May 2024 offers up to a 50% discount on serverless rates, as reflected in the prices below.

AWS Databricks Pricing:

- Premium plan: $0.37 per DBU (discounted from $0.74 per DBU)

- Enterprise plan: $0.47 per DBU (discounted from $0.94 per DBU)

Azure Databricks Pricing (US East region):

- Premium plan: $0.45 per DBU (discounted from $0.90 per DBU)

TL;DR:

| Classic/Classic Photon Clusters | AWS Databricks Pricing (AP Mumbai region) | Azure Databricks Pricing (US East region) | GCP Databricks Pricing |
|---|---|---|---|
| Standard plan | - | $0.15 per DBU | - |
| Premium plan | $0.15 per DBU | $0.30 per DBU | $0.15 per DBU |
| Enterprise plan | $0.20 per DBU | - | - |

| Serverless (Preview) | AWS Databricks Pricing (AP Mumbai region) | Azure Databricks Pricing (US East region) | GCP Databricks Pricing |
|---|---|---|---|
| Premium plan | $0.37 per DBU (discounted from $0.74 per DBU) | $0.45 per DBU (discounted from $0.90 per DBU) | - |
| Enterprise plan | $0.47 per DBU (discounted from $0.94 per DBU) | - | - |
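The rates differ along three dimensions: cloud, plan, and compute type. Because of that, it can help to treat the tables above as one lookup keyed on all three. The Python sketch below does this using only the Jobs rates quoted in this section. The dictionary layout and the helper names `jobs_rate` and `promo_discount_pct` are illustrative assumptions. The sketch also checks each promotional serverless rate against its list price.

```python
# Jobs DBU rates (USD per DBU) as quoted in the tables above.
# Keys are (cloud, plan, compute); verify against the current pricing page.
JOBS_RATES = {
    ("aws", "premium", "classic"): 0.15,       # AP Mumbai region
    ("aws", "enterprise", "classic"): 0.20,
    ("azure", "standard", "classic"): 0.15,    # US East region
    ("azure", "premium", "classic"): 0.30,
    ("gcp", "premium", "classic"): 0.15,
    ("aws", "premium", "serverless"): 0.37,    # promotional rates
    ("aws", "enterprise", "serverless"): 0.47,
    ("azure", "premium", "serverless"): 0.45,
}

# Undiscounted serverless list prices quoted alongside the promotional rates.
SERVERLESS_LIST_PRICES = {
    ("aws", "premium"): 0.74,
    ("aws", "enterprise"): 0.94,
    ("azure", "premium"): 0.90,
}


def jobs_rate(cloud: str, plan: str, compute: str) -> float:
    """Look up a Jobs DBU rate; raise KeyError if the combination is not listed."""
    key = (cloud.lower(), plan.lower(), compute.lower())
    if key not in JOBS_RATES:
        raise KeyError(f"no Jobs rate listed for {key}")
    return JOBS_RATES[key]


def promo_discount_pct(cloud: str, plan: str) -> float:
    """Percentage discount of the promotional serverless rate vs. its list price."""
    list_price = SERVERLESS_LIST_PRICES[(cloud.lower(), plan.lower())]
    promo = jobs_rate(cloud, plan, "serverless")
    return round((1 - promo / list_price) * 100, 1)


for cloud, plan in SERVERLESS_LIST_PRICES:
    print(cloud, plan, f"{promo_discount_pct(cloud, plan)}% off list")
```

Every listed promotional rate works out to exactly half its list price. That matches the "up to 50%" promotion. Raising `KeyError` for unlisted combinations, such as GCP Enterprise, avoids silently pricing a plan the tables do not cover.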

2. Databricks Pricing: Delta Live Tables (Starting at $0.20 / DBU)

Delta Live Tables makes it easy to build reliable, scalable data pipelines in SQL or Python on auto-scaling Apache Spark. It supports both continuous streaming and batch processing of data from sources into Databricks.

Delta Live Tables consumes DBUs from Jobs Compute to run streaming and batch data pipelines. Rates start at $0.20 per DBU for DLT Core on the Premium plan (AWS and GCP).

Higher DLT tiers add capabilities such as change data capture (CDC) and data quality expectations, and they carry correspondingly higher DBU rates.

Databricks offers three tiers of Delta Live Tables (DLT) pricing: DLT Core, DLT Pro, and DLT Advanced. The pricing varies based on the chosen cloud provider (AWS, Azure, or Google Cloud Platform) and the plan (Premium or Enterprise).

AWS Databricks Pricing (AP Mumbai region):

Premium plan:

- DLT Core: $0.20 per DBU (Databricks Unit)

- DLT Pro: $0.25 per DBU

- DLT Advanced: $0.36 per DBU

Enterprise plan:

- DLT Core: $0.20 per DBU

- DLT Pro: $0.25 per DBU

- DLT Advanced: $0.36 per DBU

Azure Databricks Pricing:

Premium Plan (Only plan available):

- DLT Core: $0.30 per DBU

- DLT Pro: $0.38 per DBU

- DLT Advanced: $0.54 per DBU

GCP Databricks Pricing:

Premium Plan (Only plan available):

- DLT Core: $0.20 per DBU

- DLT Pro: $0.25 per DBU

- DLT Advanced: $0.36 per DBU

Delta Live Tables Core: Allows you to easily create scalable streaming or batch pipelines in SQL and Python.

Delta Live Tables Pro: In addition to the capabilities of DLT Core, it enables you to handle change data capture (CDC) from any data source.

Delta Live Tables Advanced: Expands upon DLT Pro by adding data quality expectations and monitoring, helping you maximize the reliability of your data.

TL;DR:

| Delta Live Tables (DLT) Core | AWS Databricks Pricing (AP Mumbai region) | Azure Databricks Pricing | GCP Databricks Pricing |
|---|---|---|---|
| Premium plan | $0.20 per DBU | $0.30 per DBU | $0.20 per DBU |
| Enterprise plan | $0.20 per DBU | - | - |

| Delta Live Tables (DLT) Pro | AWS Databricks Pricing (AP Mumbai region) | Azure Databricks Pricing | GCP Databricks Pricing |
|---|---|---|---|
| Premium plan | $0.25 per DBU | $0.38 per DBU | $0.25 per DBU |
| Enterprise plan | $0.25 per DBU | - | - |

| Delta Live Tables (DLT) Advanced | AWS Databricks Pricing (AP Mumbai region) | Azure Databricks Pricing | GCP Databricks Pricing |
|---|---|---|---|
| Premium plan | $0.36 per DBU | $0.54 per DBU | $0.36 per DBU |
| Enterprise plan | $0.36 per DBU | - | - |
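The DLT tier multiplies the per-DBU rate, so the same pipeline costs noticeably more on a higher tier. Below is a minimal Python sketch using the AWS Premium rates above. The figure of 2,000 DBUs per month is hypothetical. The sketch also assumes the pipeline consumes the same number of DBUs regardless of tier, and it leaves cloud VM costs out.

```python
# DLT rates on the AWS Premium plan (AP Mumbai region) as quoted above, USD per DBU.
DLT_AWS_PREMIUM = {"core": 0.20, "pro": 0.25, "advanced": 0.36}


def dlt_monthly_cost(dbus_per_month: float, tier: str, rates: dict = DLT_AWS_PREMIUM) -> float:
    """DBU charge for a DLT pipeline on a given tier (cloud VM costs excluded)."""
    return round(dbus_per_month * rates[tier], 2)


# Hypothetical pipeline consuming 2,000 DBUs per month, assumed equal across tiers.
for tier in ("core", "pro", "advanced"):
    print(f"{tier:>8}: ${dlt_monthly_cost(2_000, tier):,.2f}")
```

On these rates, DLT Advanced costs 1.8 times as much per DBU as DLT Core. It therefore pays to pick the lowest tier whose features your pipeline actually needs: CDC comes with Pro, and data quality expectations come with Advanced.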

3. Databricks Pricing: Databricks SQL (Starting at $0.22 / DBU)

Databricks SQL provides lightning fast interactive SQL analytics directly on massive datasets in data lakes. It scales to trillions of rows with ANSI-compliant syntax and BI integration.

Key aspects of Databricks SQL include:

- Massive scale - It leverages the Spark SQL engine to query trillions of rows of data in the lakehouse. No data movement needed.

- High performance - The optimized engine is designed for fast query performance, with low-latency responses even on large datasets.

- ANSI SQL syntax - Standard ANSI SQL makes it easy for anyone familiar with SQL to start querying.

- BI integrations - Integrates with BI tools like Tableau, Power BI, and Looker to visualize and share insights.

- Scalable workloads - SQL warehouses auto-scale by provisioning resources to match query demand.

- Workload isolation - Separate SQL warehouses (formerly called SQL endpoints) can be provisioned for different teams or workloads, giving more consistent performance and control.

Databricks offers three main SQL pricing options: SQL Classic, SQL Pro, and SQL Serverless. The pricing varies depending on the chosen cloud provider (AWS, Azure, or Google Cloud Platform) and the plan (Premium or Enterprise).

Databricks SQL makes it cost-effective to query massive datasets directly instead of having to downsample or pre-aggregate data for BI tools.

AWS Databricks Pricing (US East (N. Virginia)):

Premium plan:

- SQL Classic: $0.22 per DBU (Databricks Unit)

- SQL Pro: $0.55 per DBU

- SQL Serverless: $0.70 per DBU (includes cloud instance cost)

Enterprise plan:

- SQL Classic: $0.22 per DBU

- SQL Pro: $0.55 per DBU

- SQL Serverless: $0.70 per DBU (includes cloud instance cost)

Azure Databricks Pricing (US East region):

Premium Plan (Only plan available):

- SQL Classic: $0.22 per DBU

- SQL Pro: $0.55 per DBU

- SQL Serverless: $0.70 per DBU (includes cloud instance cost)

GCP Databricks Pricing:

Premium Plan (Only plan available):

- SQL Classic: $0.22 per DBU

- SQL Pro: $0.69 per DBU

- SQL Serverless (Preview): $0.88 per DBU (includes cloud instance cost)

SQL Classic: Allows you to run interactive SQL queries for data exploration on a self-managed SQL warehouse.

SQL Pro: Provides better performance and extends the SQL experience on the lakehouse for exploratory SQL, SQL ETL/ELT, data science, and machine learning on a self-managed SQL warehouse.

SQL Serverless: Offers the best performance for high-concurrency BI and extends the SQL experience on the lakehouse for exploratory SQL, SQL ETL/ELT, data science, and machine learning on a fully managed, elastic, serverless SQL warehouse hosted in the customer's Databricks account. The pricing for SQL Serverless includes the underlying cloud instance cost.

TL;DR:

| SQL Classic | AWS Databricks Pricing (US East (N. Virginia)) | Azure Databricks Pricing (US East region) | GCP Databricks Pricing |
|---|---|---|---|
| Premium plan | $0.22 per DBU | $0.22 per DBU | $0.22 per DBU |
| Enterprise plan | $0.22 per DBU | - | - |

| SQL Pro | AWS Databricks Pricing (US East (N. Virginia)) | Azure Databricks Pricing (US East region) | GCP Databricks Pricing |
|---|---|---|---|
| Premium plan | $0.55 per DBU | $0.55 per DBU | $0.69 per DBU |
| Enterprise plan | $0.55 per DBU | - | - |

| SQL Serverless | AWS Databricks Pricing (US East (N. Virginia)) | Azure Databricks Pricing (US East region) | GCP Databricks Pricing |
|---|---|---|---|
| Premium plan | $0.70 per DBU (includes cloud instance cost) | $0.70 per DBU (includes cloud instance cost) | $0.88 per DBU (includes cloud instance cost) |
| Enterprise plan | $0.70 per DBU (includes cloud instance cost) | - | - |
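SQL Classic and SQL Pro rates exclude the cloud instance cost, while SQL Serverless includes it. One rough way to compare them is a break-even calculation: serverless comes out cheaper when the VM cost you would otherwise pay per DBU exceeds the difference in rates. The Python sketch below assumes a workload consumes the same number of DBUs on every warehouse type. That is a hypothetical simplification, and the helper names are illustrative.

```python
# AWS Premium Databricks SQL rates quoted above (USD per DBU, US East).
SQL_CLASSIC = 0.22
SQL_PRO = 0.55
SQL_SERVERLESS = 0.70  # includes the cloud instance cost


def breakeven_vm_cost_per_dbu(self_managed_rate: float, serverless_rate: float = SQL_SERVERLESS) -> float:
    """VM cost per DBU at which a self-managed warehouse costs the same as serverless.

    Hypothetical simplification: equal DBU consumption on both warehouse types."""
    return round(serverless_rate - self_managed_rate, 2)


def cheaper_option(self_managed_rate: float, vm_cost_per_dbu: float) -> str:
    """Compare all-in cost per DBU; ties go to the self-managed warehouse."""
    self_managed_total = self_managed_rate + vm_cost_per_dbu
    return "serverless" if SQL_SERVERLESS < self_managed_total else "self-managed"


print(breakeven_vm_cost_per_dbu(SQL_CLASSIC))  # 0.48
print(breakeven_vm_cost_per_dbu(SQL_PRO))      # 0.15
```

In practice, idle time and startup behavior also differ between warehouse types. This per-DBU comparison ignores both, so treat it as a first approximation only.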

4. Databricks Pricing: Data Science & ML (Starting at $0.40 / DBU)

Databricks provides a complete platform for data science and machine learning powered by Spark, MLflow and Delta Lake. This enables data teams to collaborate on the full ML lifecycle.

ML workloads run on All-Purpose clusters, with DBU rates starting at $0.40 per DBU under the Standard plan on Azure. ML-optimized clusters, GPU instances, and advanced MLOps capabilities come at higher price points.

Databricks offers pricing options for running data science and machine learning workloads, which vary based on the cloud provider (AWS, Azure, or Google Cloud Platform) and the chosen plan (Standard, Premium, or Enterprise).

AWS Databricks Pricing (AP Mumbai region):

Premium plan:

- Classic All-Purpose/Classic All-Purpose Photon clusters: $0.55 per DBU

- Serverless (Preview): $0.75 per DBU (includes underlying compute costs; 30% discount applies starting May 2024)

Enterprise plan:

- Classic All-Purpose/Classic All-Purpose Photon clusters: $0.65 per DBU

- Serverless (Preview): $0.95 per DBU (includes underlying compute costs; 30% discount applies starting May 2024)

Azure Databricks Pricing (US East region):

Standard Plan:

- Classic All-Purpose/Classic All-Purpose Photon clusters: $0.40 per DBU

Premium Plan:

- Classic All-Purpose/Classic All-Purpose Photon clusters: $0.55 per DBU

- Serverless (Preview): $0.95 per DBU (includes underlying compute costs; 30% discount applies starting May 2024)

GCP Databricks Pricing:

Premium Plan (Only plan available):

- Classic All-Purpose/Classic All-Purpose Photon clusters: $0.55 per DBU

Classic All-Purpose/Classic All-Purpose Photon clusters: These are self-managed clusters optimized for running interactive workloads.

Serverless (Preview): This is a fully managed, elastic serverless platform for running interactive workloads.

TL;DR:

| Classic All-Purpose/Classic All-Purpose Photon clusters | AWS Databricks Pricing (AP Mumbai region) | Azure Databricks Pricing (US East region) | GCP Databricks Pricing |
|---|---|---|---|
| Standard plan | - | $0.40 per DBU | - |
| Premium plan | $0.55 per DBU | $0.55 per DBU | $0.55 per DBU |
| Enterprise plan | $0.65 per DBU | - | - |

| Serverless (Preview) | AWS Databricks Pricing (AP Mumbai region) | Azure Databricks Pricing (US East region) | GCP Databricks Pricing |
|---|---|---|---|
| Premium plan | $0.75 per DBU (includes underlying compute costs; 30% discount applies starting May 2024) | $0.95 per DBU (includes underlying compute costs; 30% discount applies starting May 2024) | - |
| Enterprise plan | $0.95 per DBU (includes underlying compute costs; 30% discount applies starting May 2024) | - | - |
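All-Purpose (interactive) compute carries a much higher DBU rate than Jobs compute. On the AWS Premium rates quoted in this article, that is $0.55 versus $0.15 per DBU. Running scheduled workloads on Jobs compute instead can therefore cut the DBU charge considerably. The Python sketch below estimates that saving. It assumes a hypothetical nightly job consuming 40 DBUs per run, and it assumes the job consumes the same number of DBUs on either cluster type.

```python
ALL_PURPOSE_RATE = 0.55  # AWS Premium, Classic All-Purpose, AP Mumbai (quoted above)
JOBS_RATE = 0.15         # AWS Premium, Jobs Classic, AP Mumbai (quoted earlier)


def monthly_savings(dbus_per_run: float, runs_per_month: int) -> float:
    """DBU-charge savings from moving a scheduled workload from an All-Purpose
    cluster to Jobs compute, assuming equal DBU consumption on both."""
    monthly_dbus = dbus_per_run * runs_per_month
    return round(monthly_dbus * (ALL_PURPOSE_RATE - JOBS_RATE), 2)


# Hypothetical nightly job: 40 DBUs per run, 30 runs per month.
print(monthly_savings(40, 30))  # 480.0
```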

5. Databricks Pricing: Model Serving (Starting at $0.07 / DBU)

In addition to training ML models, Databricks lets you deploy models directly for low-latency, auto-scaling inference via its serverless offering. This allows data teams to integrate ML models with applications, take advantage of auto-scaling, and pay only for what they use.

Databricks offers model serving and feature serving capabilities with pricing that varies based on the cloud provider (AWS, Azure, or Google Cloud Platform) and the chosen plan (Premium or Enterprise).

Key aspects of Databricks Serverless Inference include:

- Real-time predictions: Achieve sub-second latency for model predictions on new data.

- Auto-scaling: Serverless automatically scales up and down to handle usage spikes and troughs.

- Pay-per-use pricing: Charges track the serving capacity used to handle requests rather than permanently provisioned model instances.

- Integrates with MLflow: Deploy models tracked in the MLflow Model Registry.

- Multiple frameworks: Supports major frameworks like TensorFlow, PyTorch, and scikit-learn.

- Monitoring: Detailed metrics on prediction throughput, data drift, costs, and more.

AWS Databricks Pricing (US East (N. Virginia)):

Premium plan:

- Model Serving and Feature Serving: $0.07 per DBU (Databricks Unit), includes cloud instance cost

- GPU Model Serving: $0.07 per DBU, includes cloud instance cost

Enterprise plan:

- Model Serving and Feature Serving: $0.07 per DBU, includes cloud instance cost

- GPU Model Serving: $0.07 per DBU, includes cloud instance cost

Azure Databricks Pricing (US East region):

Premium Plan(Only plan available):

- Model Serving and Feature Serving: $0.07 per DBU, includes cloud instance cost

- GPU Model Serving: $0.07 per DBU, includes cloud instance cost

GCP Databricks Pricing:

Premium Plan (Only plan available):

- Model Serving and Feature Serving: $0.088 per DBU, includes cloud instance cost

Model Serving: Allows you to serve any model with high throughput, low latency, and autoscaling. The pricing is based on concurrent requests.

Feature Serving: Enables you to serve features and functions with low latency. The pricing is also based on concurrent requests.

GPU Model Serving: Provides GPU-accelerated compute for lower latency and higher throughput on production applications. The pricing is based on GPU instances per hour.

TL;DR:

| Model Serving and Feature Serving | AWS Databricks Pricing (US East (N. Virginia)) | Azure Databricks Pricing (US East region) | GCP Databricks Pricing |
|---|---|---|---|
| Premium plan | $0.07 per DBU; includes cloud instance cost | $0.07 per DBU; includes cloud instance cost | $0.088 per DBU; includes cloud instance cost |
| Enterprise plan | $0.07 per DBU; includes cloud instance cost | - | - |

| GPU Model Serving | AWS Databricks Pricing (US East (N. Virginia)) | Azure Databricks Pricing (US East region) | GCP Databricks Pricing |
|---|---|---|---|
| Premium plan | $0.07 per DBU; includes cloud instance cost | $0.07 per DBU; includes cloud instance cost | - |
| Enterprise plan | $0.07 per DBU; includes cloud instance cost | - | - |

Check out the Databricks pricing page for in-depth information on other Mosaic AI services.

Databricks Costs — Key Factors Affecting It

While DBUs provide a standardized usage measure, total DBUs depend on data and workload specifics. The key factors that drive DBU usage are:

- Data Volume: Higher volumes require more processing, increasing DBUs.

- Data Complexity: More complex data and algorithms lead to higher DBU consumption.

- Data Velocity: For streaming, higher throughput increases DBU usage.
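These drivers reach the bill mainly through cluster size and runtime. For classic clusters, each instance type carries a published DBU-per-hour rating. Every node, the driver included, accrues DBUs while the cluster runs. The Python sketch below shows that arithmetic, with runtime given in seconds to mirror per-second billing. The 0.75 DBU/hour rating is a hypothetical placeholder, so look up the real figure for your instance type. The sketch also assumes a fixed-size cluster in which the driver and workers share one instance type.

```python
def dbus_consumed(dbu_per_node_hour: float, num_workers: int, runtime_seconds: int) -> float:
    """DBUs for a fixed-size classic cluster: (driver + workers) x DBU/hour x hours.

    Hypothetical simplification: driver and workers use the same instance type,
    and the cluster does not autoscale during the run."""
    nodes = num_workers + 1          # +1 for the driver node
    hours = runtime_seconds / 3600   # per-second runtime converted to hours
    return nodes * dbu_per_node_hour * hours


# Hypothetical run: 4 workers plus a driver, rated 0.75 DBU/hour, running 90 minutes.
usage = dbus_consumed(0.75, num_workers=4, runtime_seconds=90 * 60)
print(usage)  # 5.625
```

Larger data volumes, heavier processing, and higher streaming throughput show up here as more workers, longer runtimes, or both. With autoscaling, you would sum this calculation over the periods during which the worker count stayed constant.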

Now that we've covered most of the Databricks cost and pricing aspects, it's worth mentioning that there's actually a tool for calculating Databricks cost—Databricks pricing calculator.

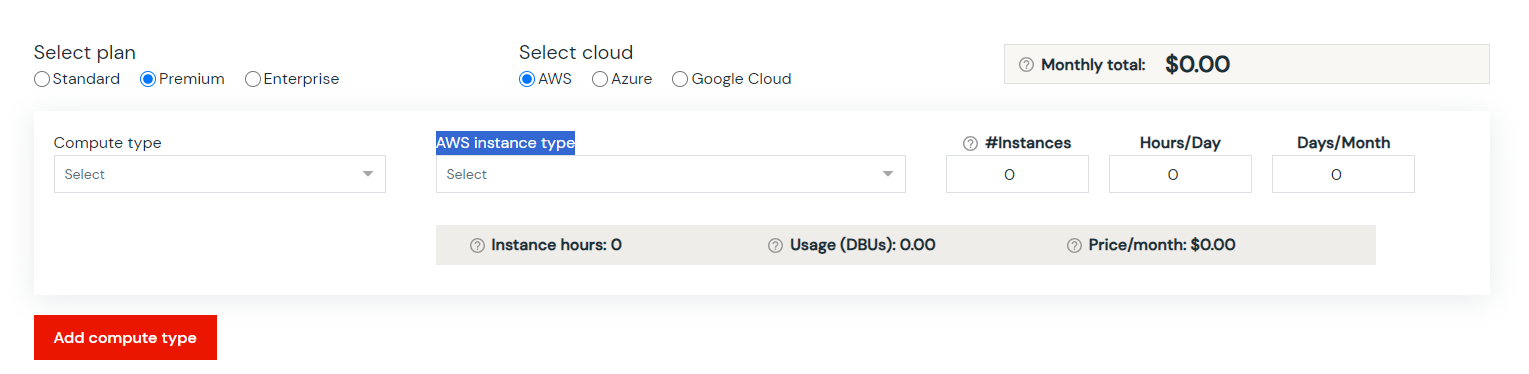

Using DBU Calculator to Estimate Databricks Costs

To simplify cost estimation, Databricks provides a handy DBU Calculator. It lets you model hypothetical workloads based on parameters like:

- Databricks edition (Standard, Premium, or Enterprise)

- Compute type

- Instance type (for example, an AWS instance type)

- Cloud platform and region

You can tweak these parameters to reflect your actual data and pipelines. The Databricks calculator will help you estimate the:

- Databricks DBUs likely to be consumed by the workload

- Applicable Databricks DBU cost based on choices

- Total daily and monthly cost

This provides a projected Databricks spend based on your unique situation. The calculator also lets you experiment with different scenarios and see how the Databricks pricing changes. So by modeling your existing and projected future workloads through the DBU Calculator, you can arrive at a reasonable estimate of your overall Databricks costs.
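The calculator handles this for you, but the same what-if exercise is easy to sketch. The Python below projects daily and monthly cost for one workload across combinations of AWS plan and compute type, using the Jobs rates quoted earlier. Several inputs are hypothetical simplifications, not calculator output: the 50 DBUs per day, the classic VM cost per DBU, and the 30-day month. So is the assumption that the workload uses the same number of DBUs on classic and serverless compute.

```python
# AWS Jobs rates quoted earlier (USD per DBU); serverless values are the promotional rates.
JOBS_RATES = {
    ("premium", "classic"): 0.15,
    ("premium", "serverless"): 0.37,
    ("enterprise", "classic"): 0.20,
    ("enterprise", "serverless"): 0.47,
}
CLASSIC_VM_COST_PER_DBU = 0.10  # hypothetical cloud-provider cost; serverless already includes it
DAYS_PER_MONTH = 30             # simplifying assumption


def project(daily_dbus: float, plan: str, compute: str) -> tuple:
    """Return (daily, monthly) cost in USD for one plan/compute scenario."""
    rate = JOBS_RATES[(plan, compute)]
    if compute == "classic":
        rate += CLASSIC_VM_COST_PER_DBU  # classic compute also incurs VM charges
    daily = daily_dbus * rate
    return round(daily, 2), round(daily * DAYS_PER_MONTH, 2)


for plan, compute in JOBS_RATES:
    daily, monthly = project(50, plan, compute)
    print(f"{plan:<10} {compute:<10} ${daily:>7.2f}/day  ${monthly:>9.2f}/month")
```

Changing a single assumption, such as the VM cost per DBU, can reorder the scenarios. That sensitivity is exactly what this kind of what-if modeling is meant to expose.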

Databricks Pricing and Billing Challenges

While Databricks provides extensive flexibility, some key Databricks pricing and billing challenges to note are:

- Manual Billing Data Integration: Without automated processes, integrating Databricks costs into overall cloud spend reporting requires substantial manual effort. This is time-consuming and increases the risk of billing errors.

- Split Billing: Databricks costs come from two sources, DBU (licensing) charges billed by Databricks and infrastructure costs billed by the cloud provider. This split makes it difficult to assess the total cost of Databricks.

- Lack of Spending Controls: Databricks offers limited native spending-limit controls and cost alerting to prevent unexpected overages and budget overruns.

- Granular Cost Tracking Issues: It is hard to break Databricks costs down by specific activity, for example exploratory analysis versus production workloads. It is also difficult to determine which business units drive those costs.
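A common way to tackle the attribution problem is to tag clusters, jobs, and warehouses with an owner key, such as a team or cost center, and then aggregate spend by that tag. Databricks supports custom tags on compute resources and exposes billable usage records. The record shape below is a simplified, hypothetical stand-in for that data, not its actual schema. The helper `cost_by_tag` is likewise illustrative.

```python
from collections import defaultdict

# Simplified, hypothetical usage records: DBUs consumed, the per-DBU rate that
# applied, and the custom tags attached to the cluster, job, or warehouse.
usage_records = [
    {"dbus": 120.0, "rate": 0.15, "tags": {"team": "data-eng"}},
    {"dbus": 40.0, "rate": 0.55, "tags": {"team": "data-science"}},
    {"dbus": 30.0, "rate": 0.22, "tags": {"team": "analytics"}},
    {"dbus": 10.0, "rate": 0.55, "tags": {}},  # untagged usage
]


def cost_by_tag(records: list, tag_key: str) -> dict:
    """Sum DBU charges per tag value; records without the tag go to 'untagged'."""
    totals = defaultdict(float)
    for rec in records:
        owner = rec["tags"].get(tag_key, "untagged")
        totals[owner] += rec["dbus"] * rec["rate"]
    return {owner: round(cost, 2) for owner, cost in sorted(totals.items())}


print(cost_by_tag(usage_records, "team"))
```

The untagged bucket is the practical signal to watch. The larger it grows, the less of the bill you can attribute, which is why enforcing tags consistently (for example, through cluster policies) matters.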


Conclusion

The Databricks pricing model is pay-as-you-go: users are charged solely for the resources they use, which makes it a transparent, user-friendly approach. The core billing unit is the Databricks Unit (DBU), which represents the computational resources used to run workloads. DBU consumption depends on factors like cluster size, runtime, and the features enabled.

In this article, we covered:

- What a Databricks Unit (DBU) is and its impact on overall cost

- How total cost is calculated from DBU consumption and DBU rates

- How DBU rates vary across cloud providers, Databricks editions, and compute types

- What the Databricks free trial provides

- How Databricks pricing works

- A pricing breakdown for key Databricks products (Jobs, Delta Live Tables, Databricks SQL, Data Science & ML, and Mosaic AI Model Serving)

- The primary cost drivers affecting DBU consumption

- The Databricks DBU Calculator for better cost estimation and budgeting

- Challenges related to Databricks pricing and billing

...and so much more!

At first glance, navigating Databricks pricing might seem daunting, given its range of products and cloud options. However, understanding its cost structure, particularly DBU costs, is critical for avoiding surprises and keeping analytical operations within budget.

FAQs

What is Databricks pricing based on?

Databricks uses a pay-as-you-go pricing model based on usage measured in Databricks Units (DBUs). You are charged for the computational resources used to run workloads.

How are Databricks DBUs calculated?

Databricks DBUs encapsulate the total use of compute resources like CPU, memory, and I/O to run workloads. DBU usage depends on factors like cluster size, runtime, and enabled features.

What drives Databricks costs?

The main factors affecting Databricks costs are data volume, data complexity, and data velocity. Higher volumes, more complex processing, and increased throughput all increase DBU usage.

How do you calculate overall Databricks cost?

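Total Databricks cost = DBUs consumed × DBU rate (the price per DBU). The DBU rate varies based on the cloud provider, region, Databricks edition, and compute type. For classic compute, cloud infrastructure costs are billed separately by your cloud provider.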

How can you estimate Databricks costs?

Databricks provides a DBU Calculator tool to model hypothetical workloads and estimate likely DBU usage. This projected DBU usage can be multiplied by Databricks DBU costs to estimate overall costs.

What is the Databricks Community Edition?

It's a free version of Databricks with limited features, suitable for training or limited usage.

What does the Databricks free trial offer?

The free trial provides user-interactive notebooks to work with various tools like Apache Spark and Python, without any charge for Databricks services, though underlying cloud infrastructure charges apply.

What are the key factors that affect DBU cost?

The key factors that affect DBU cost are the cloud provider (AWS, Azure, or GCP), region, Databricks edition (Standard, Premium, or Enterprise), instance type, and compute type.

How are DBUs billed?

DBUs are billed on a per-second basis, making the pricing flexible based on usage.

What factors affect the number of DBUs consumed?

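Factors include the amount of data processed, the instance types used (vCPU and memory), cluster size, and runtime. Region and pricing tier affect the price you pay per DBU rather than the number of DBUs consumed.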

How does Serverless Compute affect Databricks cost?

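Serverless compute removes the need to manage clusters. Its DBU rates are higher than those for classic compute, but they include the underlying cloud instance cost, so you do not pay your cloud provider separately for those instances.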

Are there any commitment-based discounts available?

Yes, committing to a certain amount of usage can earn discounts off the standard rate.

How do Spot Instances contribute to cost-saving?

Spot instances can reduce the cloud infrastructure (VM) portion of your bill by up to 90% compared with on-demand pricing. DBU charges from Databricks are not discounted by using spot instances.

What is Databricks Model Serving?

Databricks Model Serving allows for deploying machine learning models for low-latency and auto-scaling inference, with pay-per-use pricing based on DBUs.

How is pricing affected by the Databricks Compute type chosen?

Pricing varies based on the compute type chosen as each caters to different processing needs and resource consumption.

What additional costs should be considered?

Additional costs may include Enhanced Security & Compliance Add-ons, especially for regulated data processing.

Why is Databricks so expensive?

Databricks pricing can seem expensive because it provides a comprehensive unified analytics platform with advanced capabilities for data engineering, data science, machine learning, and analytics. The costs are driven by factors like:

- Cloud provider

- Region

- Databricks edition (Standard, Premium, Enterprise)

- Instance types

- Compute types used

However, the pay-as-you-go model means you only pay for the resources you consume.

What is Databricks pricing model?

Databricks follows a pay-as-you-go pricing model where users are charged only for the resources they use, based on a core billing unit called Databricks Units (DBUs) which represent computational usage.

Is Databricks free to use?

No, Databricks is not completely free to use. However, it offers a 14-day free trial that provides access to the complete Databricks platform for evaluation purposes. After the trial period, users are charged based on their actual usage.

How is Databricks billed?

Databricks billing is based on the number of Databricks Units (DBUs) consumed, multiplied by the DBU cost which varies based on factors like the cloud provider, region, Databricks edition, instance type, and compute type.

Does Databricks have a free plan?

No, Databricks does not offer a permanent free plan for production workloads. Beyond the 14-day free trial, the Community Edition offers a free but limited version of Databricks suited to training or limited usage.

Is Databricks free on AWS?

No, Databricks is not free to use on AWS. Users have to pay for the Databricks Units (DBUs) consumed on AWS, based on the DBU cost for the specific AWS region and Databricks edition.

Is Databricks owned by Microsoft?

No, Databricks is not owned by Microsoft. It is an independent company that provides its platform on multiple cloud providers like AWS, Azure (Microsoft), and Google Cloud Platform.

What is the Databricks DBU Calculator?

Databricks DBU Calculator is a tool that helps estimate DBUs and costs based on workload parameters like Databricks edition, compute type, instance type, cloud platform, and region.

How does Databricks pricing compare to traditional data analytics platforms?

Databricks claims to offer better price-performance, especially on AWS using Graviton2 instances.

How does the region affect Databricks pricing?

Pricing may vary based on the region due to different operational costs and cloud resource pricing.

What is Delta Lake?

Delta Lake is an optimized storage layer that brings reliability to data lakes, providing ACID transactions, scalable metadata handling, and unified streaming/batch processing.

What is Delta Engine?

Delta Engine is an optimized query engine designed for efficient SQL query execution on data stored in Delta Lake, using techniques like caching, indexing, and query optimization.