Snowflake can handle and analyze large volumes of data. But, like any other cloud data warehouses/dbs, Snowflake is not immune to data duplication. Like any other warehouse and DBs Snowflake does support unique, primary, and foreign keys but it does not enforce these constraints (except for NOT NULL constraints). This means that duplicate rows can be inserted into Snowflake tables, which could lead to certain records being inserted more than once. There are a number of strategies that can be used to mitigate this issue.

In this article, we will cover everything you need to know about identifying and deleting duplicate data in Snowflake. We will first start by defining duplicate data, explain why it's problematic, and discuss methods to detect it. We'll also explore preventive measures and demonstrate how to delete duplicate records while loading CSV data into Snowflake.

Let's dive right in!!

Tackling Duplicate Data—Understanding the Issue

Duplicate data is one of the main data quality issues in data warehouses like Snowflake. Having multiple copies of the same data row reduces data accuracy and reliability. It is important to regularly identify and remove duplicates.

Common causes of Snowflake data duplication:

There are a few common causes of Snowflake data duplication:

1) Snowflake data duplication Cause 1—Human error:

Data duplication can occur due to human mistakes during data entry, such as accidentally entering the same information multiple times or copying and pasting data incorrectly.

2) Snowflake data duplication Cause 2—System glitches or software bugs:

Technical issues within a system or software can sometimes lead to duplicate data creation. It can happen when there are errors in data synchronization or when system processes fail to handle data properly.

3) Snowflake data duplication Cause 3—Data integration or migration processes:

During data integration or migration from one system to another, data duplication can occur if the mapping or transformation rules are not properly defined or if there are inconsistencies in data formats between the source and target systems.

4) Snowflake data duplication Cause 4—System integration:

When importing or bringing data together from multiple sources, duplicates can be created if there is no any kind of matching process. The same entity may exist in both systems but with slightly different details.

5) Snowflake data duplication Cause 5—Lack of constraints:

Primary keys and unique constraints can help prevent duplicates

Note: Snowflake does not enforce these constraints, but if they are not properly implemented, duplicates can emerge.

Impacts of Snowflake data duplication:

The impacts of duplicate Snowflake data are significant:

1) Snowflake data duplication Impact 1— Inaccurate Analysis and Poor Metrics:

Aggregate metrics and KPIs will be inflated and inaccurate with duplicate data. Analysis will also yield incorrect insights.

2) Snowflake data duplication Impact 2—Very poor data quality:

Duplicate data reduces the overall accuracy, completeness and reliability of data which reduces data quality, which can significantly undermine confidence in the data.

3) Snowflake data duplication Impact 3—Operational inefficiencies:

Additional time and resources are required for data deduplication processes, reducing operational efficiency and productivity.

4) Snowflake data duplication Impact 4—Higher costs:

The costs to identify, resolve, and prevent duplicate data can be quite high especially if done manually. These costs diminishing the return on investment (ROI) for data initiatives.

5) Snowflake data duplication Impact 5—Bad decision making:

Inaccurate data and analysis due to data duplication can lead to bad decision making. When businesses make decisions based on incomplete or incorrect data/info, it can have a negative impact on key business outcomes. For example, let’s say a company/business may make a decision to launch a new product based on the assumption that there is a large market fit for it, when in reality the market is much smaller than they thought. This can lead to lost sales + wasted marketing spend and other financial losses.

Save up to 30% on your Snowflake spend in a few minutes!

How to Delete Duplicate Records in Snowflake?

Data integrity is essential for effective analysis in Snowflake. Duplicate records can cause serious problems, resulting in incorrect insights and poor decisions. Luckily, Snowflake offers some useful tools for identifying and removing troublesome duplicates.

Here are some of the key methods available in Snowflake to identify and delete duplicate record:

1) Identify and Delete Duplicate Records—Using Snowflake DISTINCT

One straightforward way to weed out or delete duplicate records in Snowflake is by using the Snowflake DISTINCT keyword alongside the SELECT command to extract only distinct (unique) values from a dataset. So, by only picking columns with the DISTINCT keyword, Snowflake returns only unique records, removing duplicates.

For example;

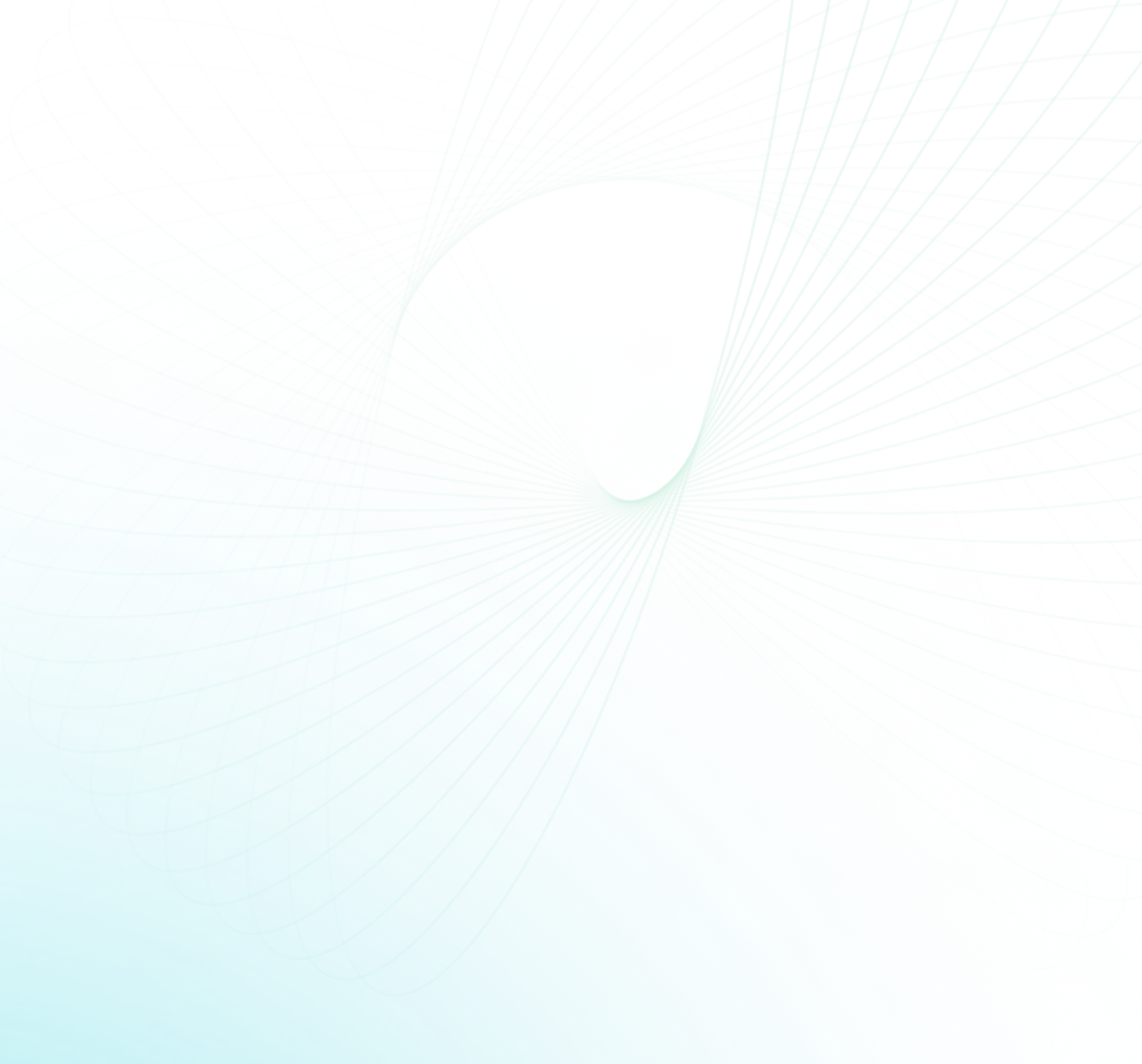

Let's start by creating a sample table and inserting some dummy data for testing purposes.

CREATE OR REPLACE TABLE STUDENT_RECORD (

STUDENT_ID NUMBER(6,0),

FIRST_NAME VARCHAR2(20),

LAST_NAME VARCHAR2(20),

AGE NUMBER(3,0),

ADDRESS VARCHAR2(100),

PHONE_NUMBER VARCHAR2(20),

GRADE VARCHAR2(10)

);

INSERT INTO STUDENT_RECORD(STUDENT_ID, FIRST_NAME, LAST_NAME, AGE, ADDRESS, PHONE_NUMBER, GRADE) VALUES

(1, 'John', 'Cena', 18, '123 Main St, City', '123-456-7890', 'A'),

(1, 'John', 'Cena', 18, '123 Main St, City', '123-456-7890', 'A'),

(2, 'Rock', 'Bottom', 17, '456 Second St, Town', '987-654-3210', 'B'),

(2, 'Rock', 'Bottom', 17, '456 Second St, Town', '987-654-3210', 'B'),

(2, 'Rock', 'Bottom', 17, '456 Second St, Town', '987-654-3210', 'B'),

(3, 'James', 'Johnson', 16, '789 Oak St, Village', '456-123-7890', 'C'),

(3, 'James', 'Johnson', 16, '789 Oak St, Village', '456-123-7890', 'C'),

(4, 'Sarah', 'Williams', 18, '321 Pine St, County', '789-123-4560', 'A');

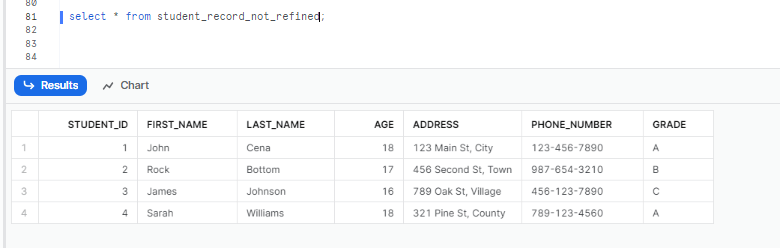

SELECT * FROM STUDENT_RECORD;

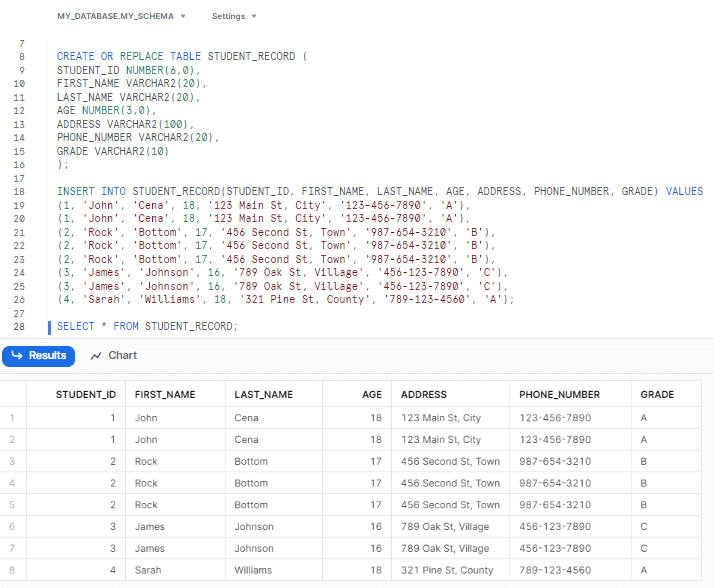

Let’s retrieve unique records from the table. To do so, simply type in the following query.

SELECT DISTINCT * FROM STUDENT_RECORD;

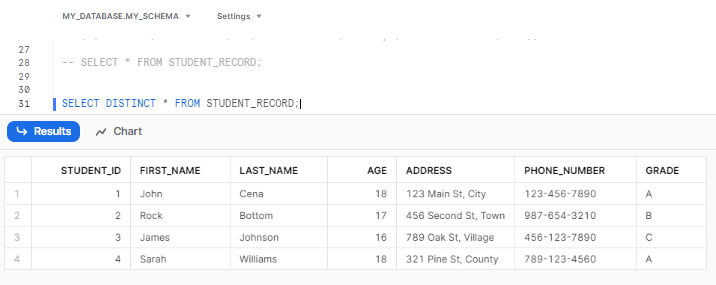

Now, let’s go a bit further and refine the student records and remove any duplicates.

First, let's create a separate table called refined_student_record by selecting distinct records from the student_record table. This will create a new table with unique records.

CREATE OR REPLACE TABLE refined_student_record AS SELECT DISTINCT * FROM student_record;Next, we will rename the original student_record table to student_record_not_refined. This step is optional but can be useful if you want to keep a backup of the original data.

ALTER TABLE student_record RENAME TO student_record_not_refined;Then, we will rename the refined_student_record table to student_record, effectively replacing the original table with the refined version.

ALTER TABLE refined_student_record RENAME TO student_record;Lastly, we can verify the changes by selecting all records from the student_record table, which now contains the refined and deduplicated data.

SELECT * FROM student_record;

2) Identify and Delete Duplicate Records—Using Snowflake ROW NUMBER() function

Snowflake ROW NUMBER function is an analytic function that assigns a unique sequential number to each row within a specified partition of the result set. Using this function, you can assign row numbers to records and then filter for rows with a row number of 1, effectively removing duplicates. This method is useful when you want to eliminate duplicates based on specific column combinations.

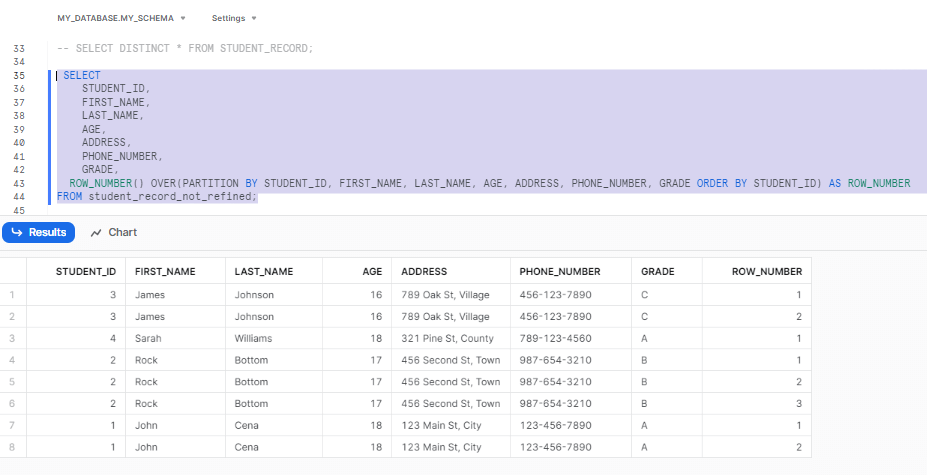

Now, let's write a query utilizes the Snowflake ROW NUMBER() analytic function to assign row numbers to each unique set of records based on the specified columns:

SELECT

STUDENT_ID,

FIRST_NAME,

LAST_NAME,

AGE,

ADDRESS,

PHONE_NUMBER,

GRADE,

ROW_NUMBER() OVER(PARTITION BY STUDENT_ID, FIRST_NAME, LAST_NAME, AGE, ADDRESS, PHONE_NUMBER, GRADE ORDER BY STUDENT_ID) AS ROW_NUMBER

FROM student_record_not_refined;

After assigning row numbers to the records, you can extract the unique records from the table by querying the rows with a row number of 1.

The SQL query below shows how to extract unique records from the student_record_not_refined table using the Snowflake ROW NUMBER analytic function:

SELECT

STUDENT_ID,

FIRST_NAME,

LAST_NAME,

AGE,

ADDRESS,

PHONE_NUMBER,

GRADE

FROM (

SELECT

STUDENT_ID,

FIRST_NAME,

LAST_NAME,

AGE,

ADDRESS,

PHONE_NUMBER,

GRADE,

ROW_NUMBER() OVER (PARTITION BY STUDENT_ID, FIRST_NAME, LAST_NAME, AGE, ADDRESS, PHONE_NUMBER, GRADE ORDER BY STUDENT_ID) AS ROW_NUMBER

FROM student_record_not_refined

) AS subquery

WHERE ROW_NUMBER = 1;As you can see, the inner subquery picks all columns from the student_record_not_refined table and adds the additional column Snowflake ROW NUMBER() OVER (...) AS ROW_NUMBER, which assigns row numbers to each unique set of records depending on the specified columns. The outer query then retrieves the columns STUDENT_ID, FIRST_NAME, LAST_NAME, AGE, ADDRESS, PHONE_NUMBER, and GRADE from the subquery. And finally, the WHERE clause filters the results to only include rows where the ROW_NUMBER column is equal to 1, successfully extracting the unique records.

3) Identify and Delete Duplicate Records—Using Snowflake SWAP WITH command

Snowflake SWAP WITH command in Snowflake allows for the swapping of content and metadata between two specified tables, including integrity constraints, which involves creating a new table with the same structure as the original table, inserting unique records into the new table, and then swapping the data between the two tables. This method is very useful to efficiently delete duplicate records in Snowflake from large datasets.

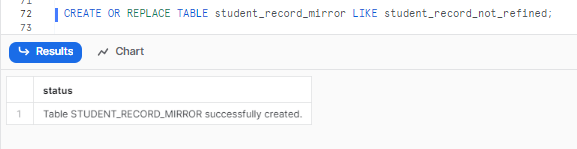

Let’s create a new table with the same structure as the source table.

Use the CREATE TABLE LIKE statement to create the STUDENT_RECORD_MIRROR table with the same structure as the STUDENT_RECORD_NOT_REFINED table, but without any records or data.

CREATE OR REPLACE TABLE student_record_mirror LIKE student_record_not_refined;

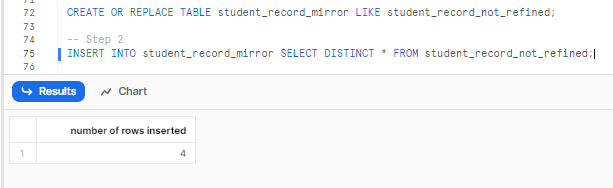

Now, insert unique records into the newly created table. Make sure to use the INSERT INTO statement to select distinct records from the STUDENT_RECORD_NOT_REFINED table and insert them into the STUDENT_RECORD_MIRROR table.

INSERT INTO student_record_mirror SELECT DISTINCT * FROM

student_record_not_refined;

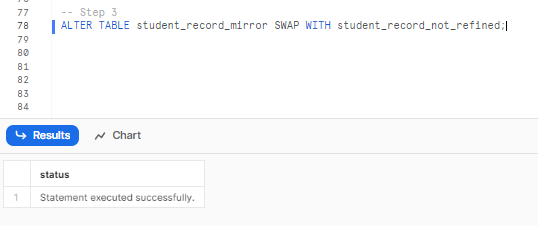

Finally, Swap the data between the two tables. Use the Snowflake SWAP WITH command to swap the data and metadata between the STUDENT_RECORD_NOT_REFINED and STUDENT_RECORD_MIRRORR tables. This operation is performed in a single transaction.

ALTER TABLE student_record_mirror SWAP WITH student_record_not_refined;

select * from student_record_not_refined;

4) Identify and Delete Duplicate Records—Using OVERWRITE command

Alternatively, you can use the Snowflake INSERT statement with the Snowflake OVERWRITE command to insert unique records directly into the student record table, which eliminates the need for creating an intermediate table and swapping data. The existing data in the table will be truncated before inserting the unique records.

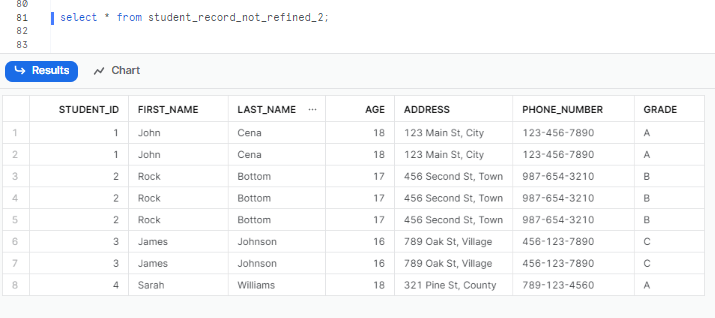

Let’s first test our table has un-refined data or not. To do so, just select all from our unrefined table.

select * from student_record_not_refined_2;

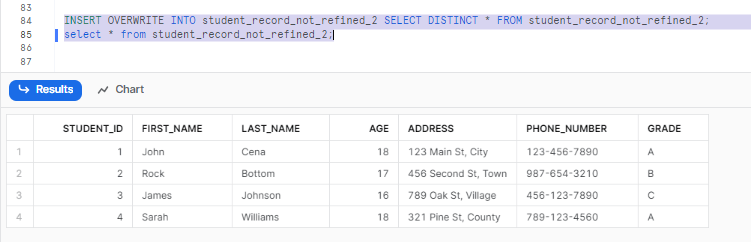

Now, lets overwrite it.

INSERT OVERWRITE INTO student_record_not_refined_2 SELECT DISTINCT * FROM student_record_not_refined_2;

select * from student_record_not_refined_2;

5) Identify and Delete Duplicate Records—Using Third-party tools to check Snowflake data quality

Apart from the built-in features, functionalities and commands provided by Snowflake, there are various third-party Snowflake tools available that specialize in data quality and deduplication. These tools include:

These tools offer exceptional functionalities to efficiently identify and delete duplicate data. So by carefully using these tools, you can benefit from flexibility and customization options tailored to their specific data management needs. These third-party tools provide enhanced capabilities and can boost Snowflake's native capabilities, allowing for comprehensive and efficient duplicate data management.

Beyond the options covered in this article, there are a few more techniques. For those, check out this video.

How Do You Prevent Data Duplication Issues in Snowflake?

So, how do you prevent data duplication issues in Snowflake? Welp!! There are several strategies you can employ, they are:

1) Standardizing data entry:

Establishing guidelines and enforcing consistent data entry formats can minimize the chaos of duplicate entries.

2) Implementing validation rules:

Applying validation rules and constraints during data entry can prevent duplicate records from being created.

3) Implementing unique identifiers:

Assigning unique identifiers to records ensures their uniqueness and helps in identifying and eliminating duplicates.

4) Setting up automated data cleaning processes:

Automating data cleaning processes can significantly reduce the occurrence of duplicate data, which involves implementing data quality tools or scripts that regularly scan and clean the data, identifying and removing duplicates.

5) Importance of regular data audits:

Conducting regular data audits is crucial for identifying and addressing duplicate data. Audits help in detecting duplicate records, understanding the root causes, and implementing necessary measures to prevent their occurrence in the future.

Delete Duplicate Record in Snowflake—Hands-on Example:

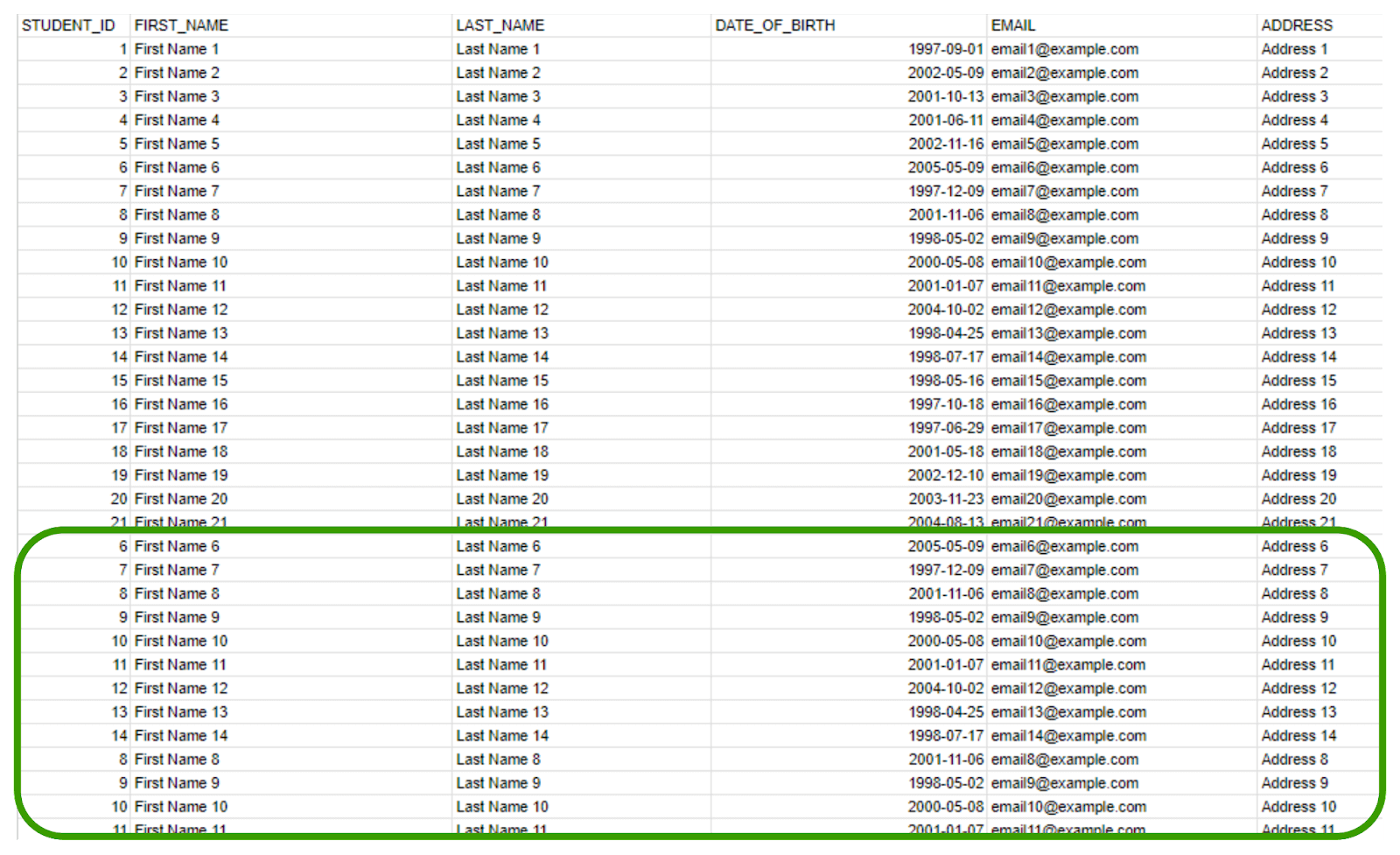

Now, let's explore a hands-on example of loading a demo CSV file that contains duplicate records. We will refine the data before loading it into the table. Follow these steps carefully to proceed:

Here is the sample of what our CSV record looks like:

To begin, we will load our CSV file into Snowflake using Snowsight. There are multiple methods to accomplish this task, such as using a Snowflake external stage or a Snowflake managed stage (internal stage). For this tutorial, we will create a Snowflake managed stage (internal stage) in Snowsight and load the CSV file into it. Follow the steps below:

Here are the steps to follow to upload CSV to Snowflake using internal stage:

Step 1: Log in to Snowsight.

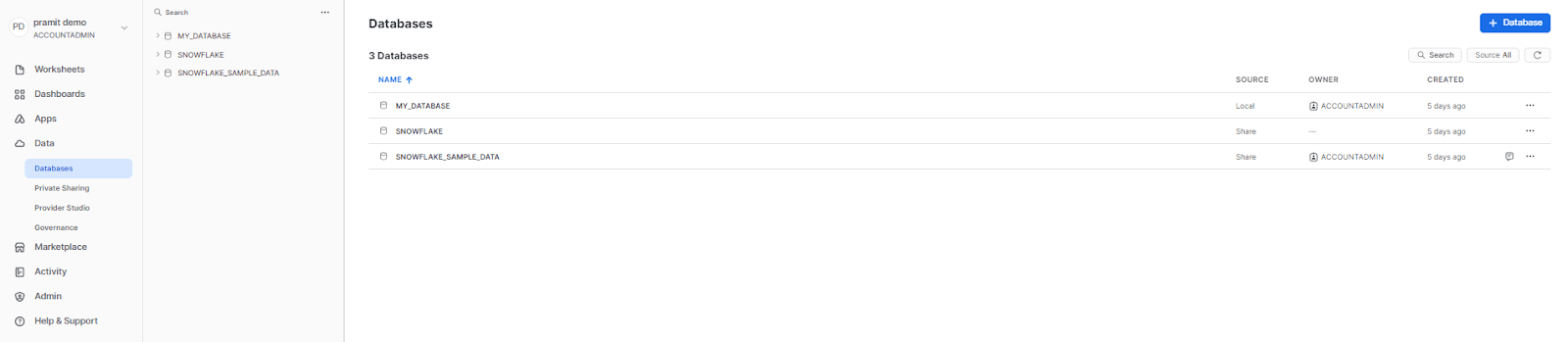

Step 2: Head over to the navigation menu, select "Data" and then click on "Databases", which will display a list of available databases.

Step 3: Select the desired database and schema from the list to set the context for your data loading process.

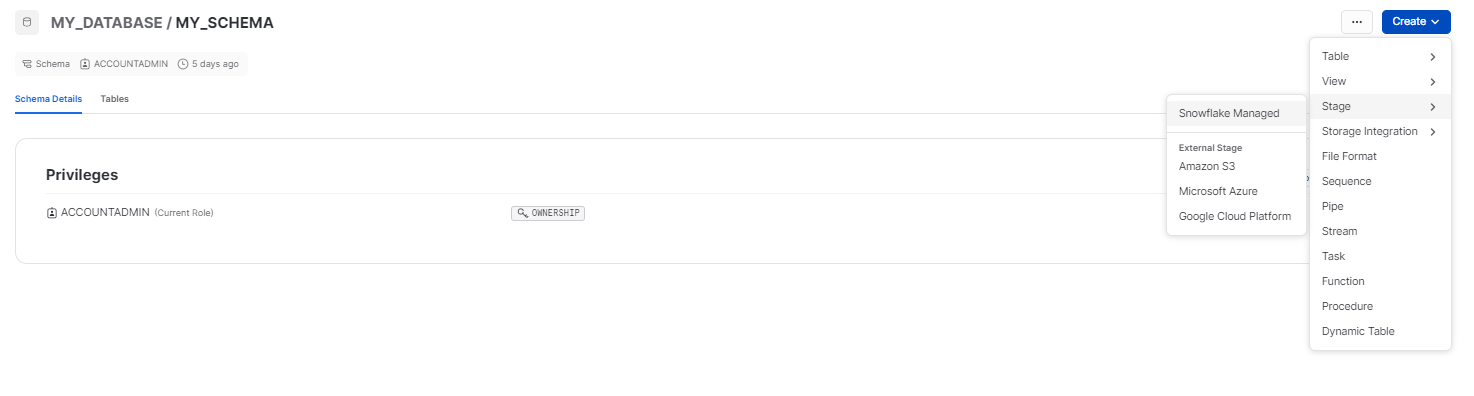

Step 4: Choose the ‘stage’ option and click on “Snowflake Managed” stage.

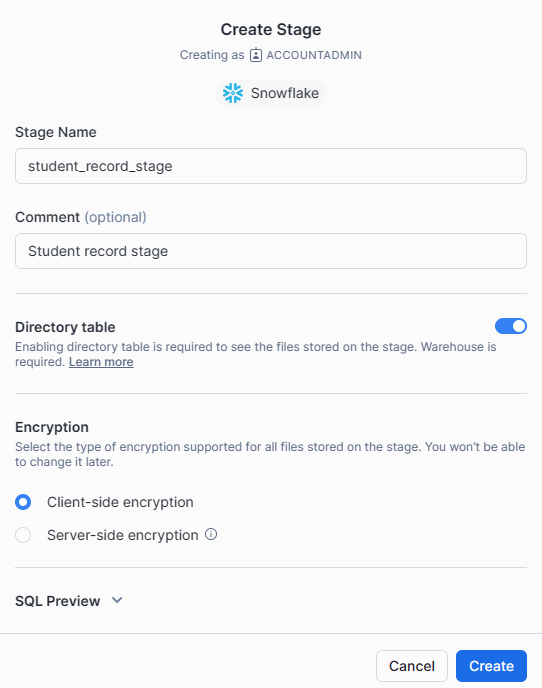

Step 5: Enter a Stage Name. In the "Stage Name" field, provide a descriptive name for your stage. Then, decide whether to enable or disable the directory table. It is important to note that the directory table stores file-level metadata regarding the data files in the stage. Lastly, select your preferred encryption type, either "Client-side" or "Server-side".

Note: Once the stage is created, your chosen encryption type will be permanent and cannot be modified.

Step 6: Click the "Create" button to create the Snowflake Managed stage.

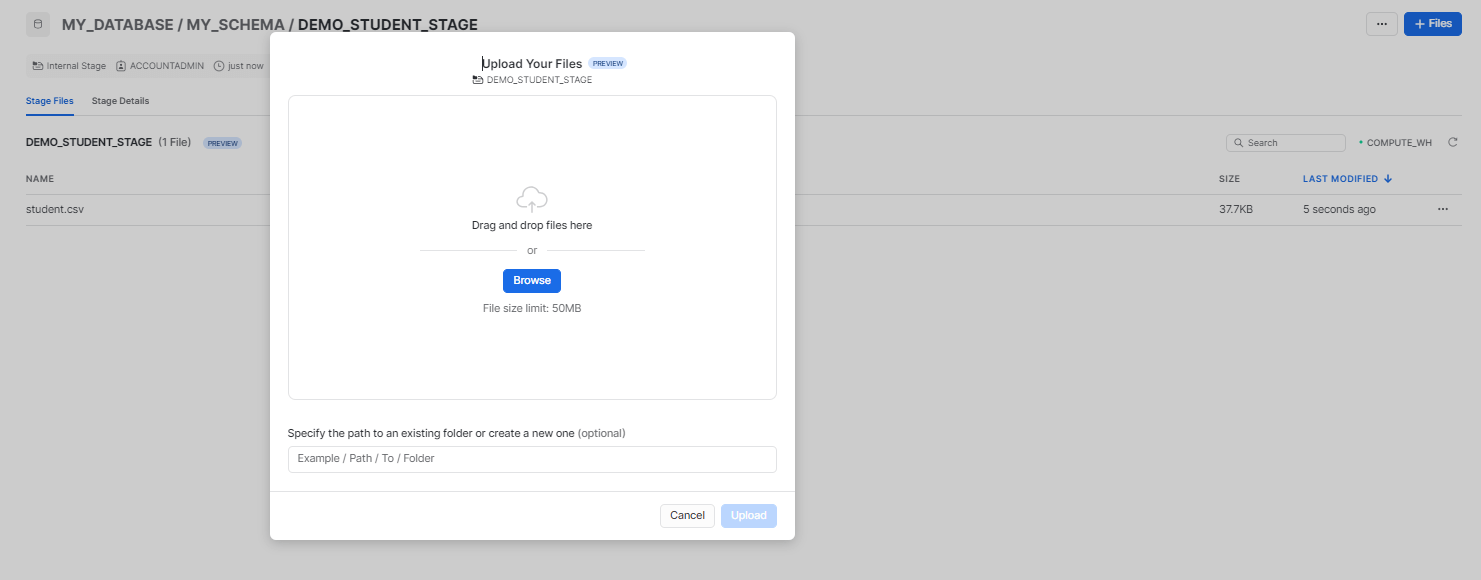

Step 7: Choose the CSV file to load into the Snowflake stage.

Step 8: After successfully uploading the CSV file, navigate to the worksheet and enter the following command to verify if the CSV file has been loaded or not.

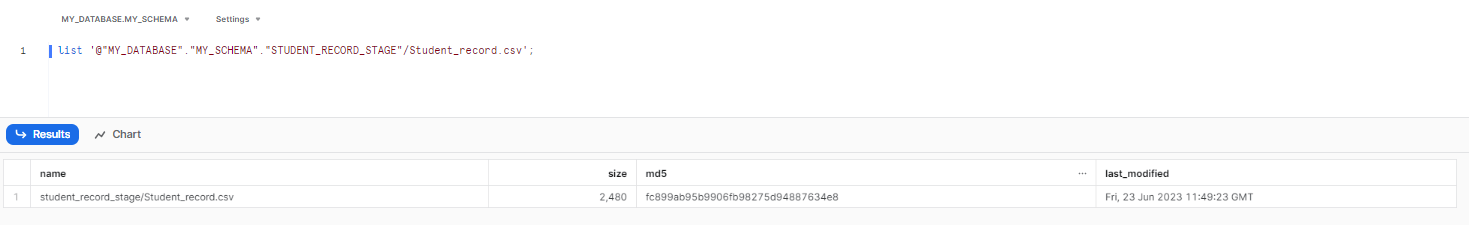

list '@"MY_DATABASE"."MY_SCHEMA"."STUDENT_RECORD_STAGE"/Student_record.csv';

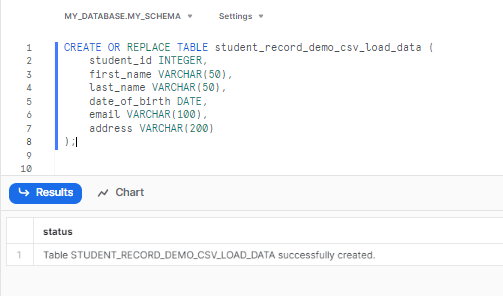

Step 9: Now, let's create a table using the CREATE TABLE statement that matches the structure of your CSV file.

Run the following command to create the table:

CREATE OR REPLACE TABLE student_record_demo_csv_load_data (

student_id INTEGER,

first_name VARCHAR(50),

last_name VARCHAR(50),

date_of_birth DATE,

email VARCHAR(100),

address VARCHAR(200)

);

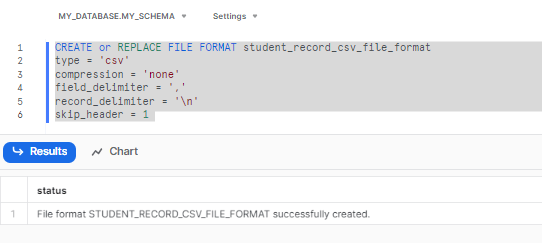

Step 10: Create a FILE FORMAT. Run the following command to create a file format:

CREATE or REPLACE FILE FORMAT student_record_csv_file_format

type = 'csv'

compression = 'none'

field_delimiter = ','

record_delimiter = '\n'

skip_header = 1

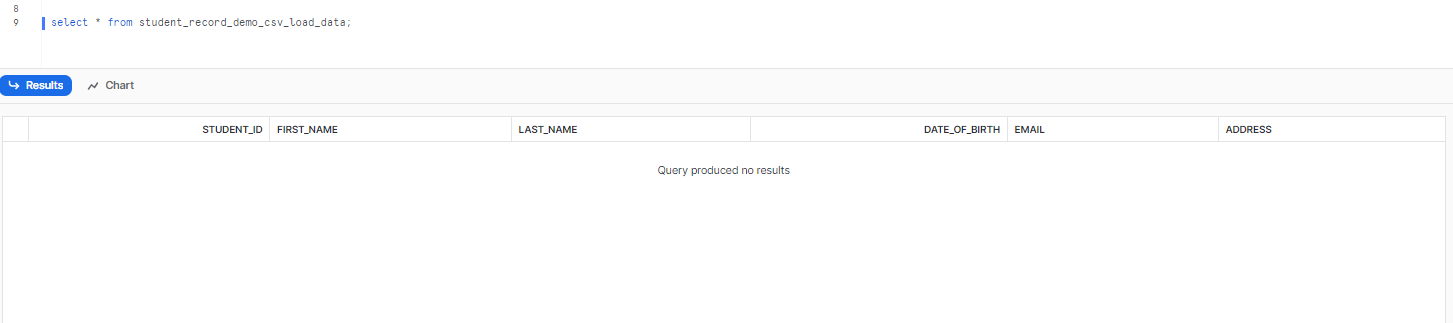

Step 11: Now that you have created the file format, let's check the table to see if there is any data. If the console displays "query produced no result", it means there is no data in the table.

select * from student_record_demo_csv_load_data;

Step 12: Finally, to load the data from the stage into a Snowflake table you can use the COPY INTO command. Choose the appropriate command based on your requirements:

- To load data as it is organized in the CSV file:

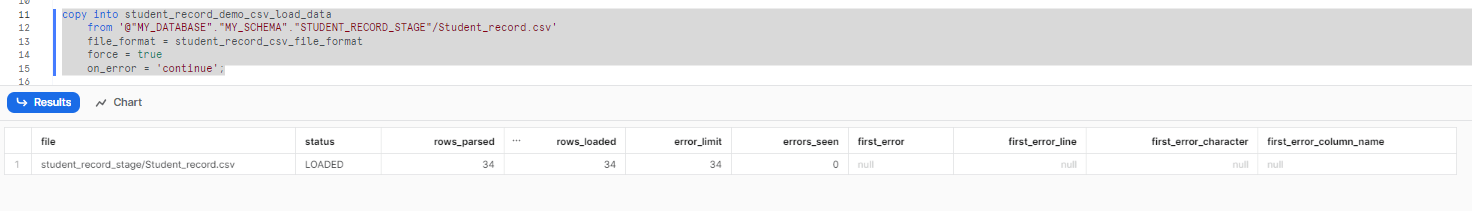

copy into student_record_demo_csv_load_data

from '@"MY_DATABASE"."MY_SCHEMA"."STUDENT_RECORD_STAGE"/Student_record.csv'

file_format = student_record_csv_file_format

force = true

on_error = 'continue';

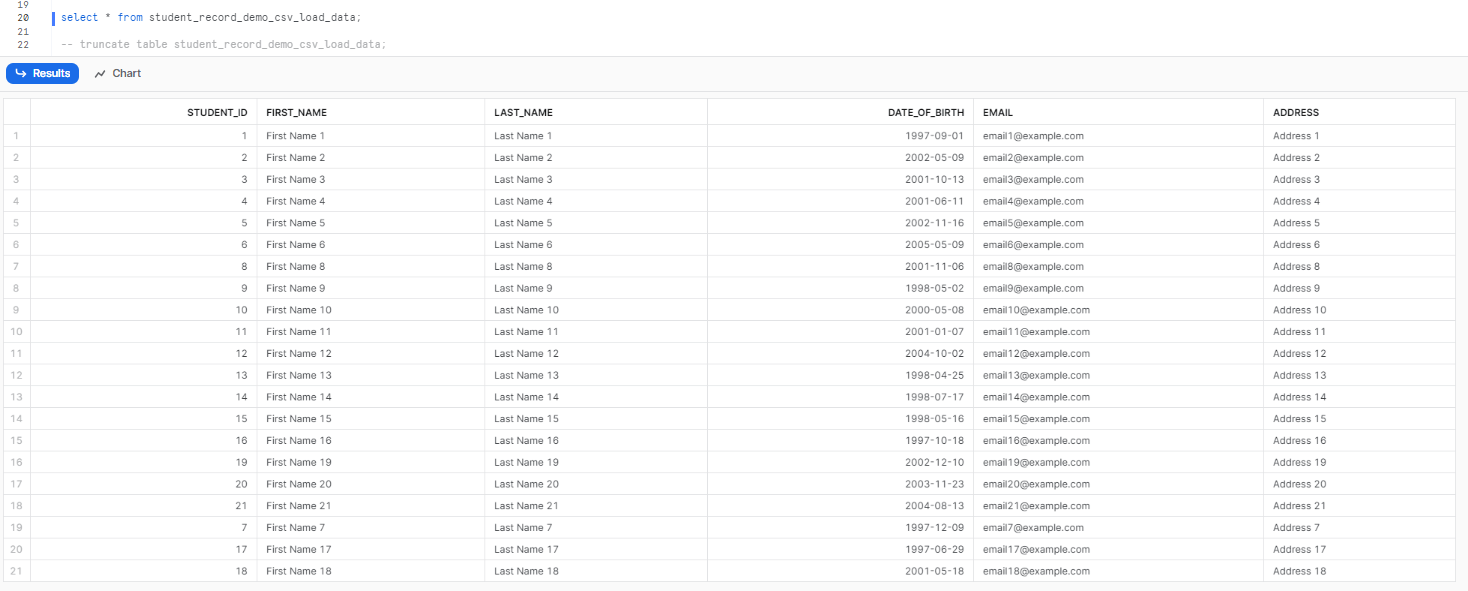

Step 13: Now, if you select everything from the table, you will be able to see all of your data. However, the issue of duplication still persists. In the next step, we will address this problem.

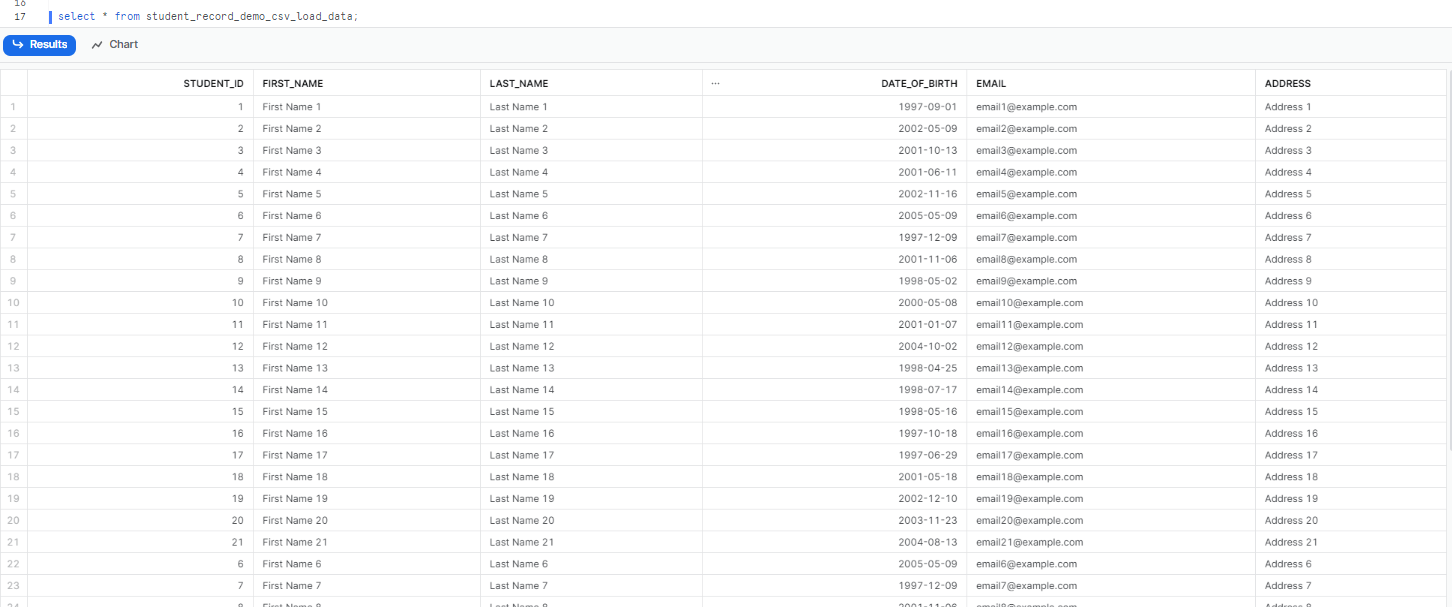

select * from student_record_demo_csv_load_data;

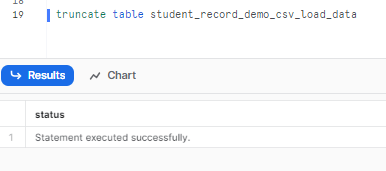

Step 14: Truncate the table to remove the entire record from the table.

truncate table student_record_demo_csv_load_data;

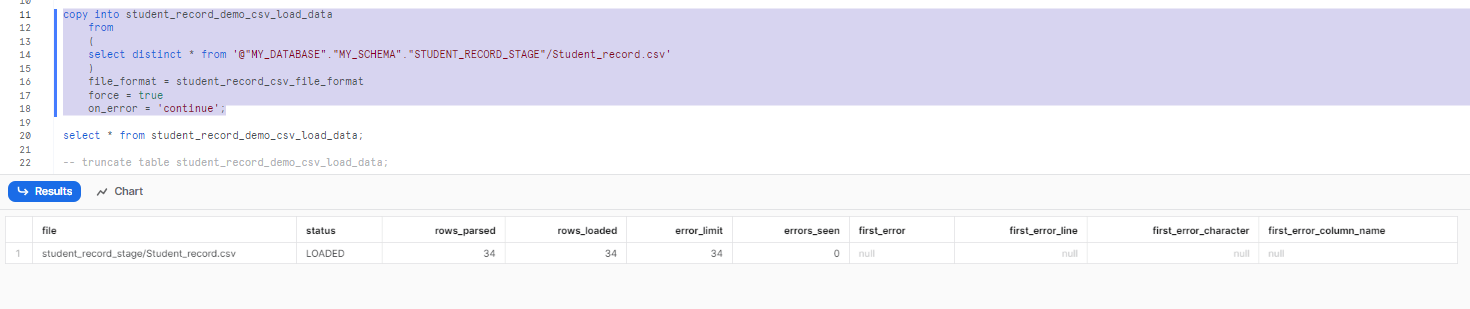

Step 15: To remove the duplicates while copying the data from the CSV file to the Snowflake table, execute the following query:

copy into student_record_demo_csv_load_data

from

(

select distinct * from '@"MY_DATABASE"."MY_SCHEMA"."STUDENT_RECORD_STAGE"/Student_record.csv'

)

file_format = student_record_csv_file_format

force = true

on_error = 'continue';

This particular query removes duplicates by selecting distinct records from the CSV file and then copies the refined data into the designated Snowflake table.

select * from student_record_demo_csv_load_data;

Congratulations! As you can see, there are no more duplicate data entries. Just like this, you can effortlessly delete duplicate data from your dataset using the provided method. It's a simple and effective way to ensure data integrity and accuracy.

Want to take Chaos Genius for a spin?

It takes less than 5 minutes.

Conclusion

In conclusion, getting rid of duplicate data in Snowflake is a lot like gardening. You have to find the "weeds" (duplicates) and use the right tools to get rid of them. To address this issue, we explored methods such as using the DISTINCT keyword, the Snowflake ROW_NUMBER() function, and the Snowflake SWAP WITH command to identify and eliminate duplicates in Snowflake. So by carefully following these strategies, you can make sure your data garden remains healthy and productive.

Remember, it's easier to stop weeds from growing in the first place, so take steps to keep your data tidy from the start. Now you have the knowledge to keep your Snowflake data clean, making it a great source for all your data analysis needs.

FAQs

What is duplicate data?

Duplicate data are records that contain the same or similar information. They can occur in Snowflake tables for a variety of reasons, such as human error, data entry errors, or system failures.

Why is it important to identify and delete duplicate data?

Duplicate data can cause a number of problems, including:

- Inaccuracy: Duplicate data can lead to inaccurate reports and analyses.

- Wasted space: Duplicate data can take up unnecessary space in Snowflake tables.

- Performance issues: Duplicate data can slow down queries and operations.

- Data security risks: Duplicate data can increase the risk of data breaches and other security incidents.

What is duplicate data in Snowflake?

Duplicate data refers to multiple copies of the same data row or record existing within Snowflake tables or datasets, which can reduce data accuracy and reliability.

What are the impacts of duplicate data in Snowflake?

Duplicate data can lead to inaccuracies in data analysis and reporting. It can also consume unnecessary storage space, leading to increased costs and inefficient data management.

Does Snowflake support unique, primary, and foreign keys?

Yes, Snowflake supports unique, primary, and foreign keys. However, it does not enforce these constraints (except for NOT NULL constraints), which can lead to the insertion of duplicate rows.

How can you delete duplicate data in Snowflake?

You can remove duplicate data in Snowflake using methods like the DISTINCT keyword, ROW_NUMBER() function, SWAP WITH command, OVERWRITE command, or leveraging third-party data quality tools.

How can you prevent duplicate data from occurring in Snowflake?

You can prevent duplicate data by standardizing data entry, implementing validation rules and constraints, assigning unique identifiers, setting up automated data cleaning processes, and conducting regular data audits.

Can third-party tools help with duplicate data management in Snowflake?

Yes, third-party tools like Talend, Dataiku, FirstEigen, and Trifacta can provide enhanced capabilities and flexibility for identifying and eliminating duplicate data in Snowflake.

Is there a way to remove duplicates while loading data from a CSV file into Snowflake?

Yes, you can use the COPY INTO command with the DISTINCT keyword to select distinct records from the CSV file and load them into the Snowflake table, effectively removing duplicates during the load process.