Databricks Runtime (DBR) is the engine that powers your Databricks clusters. It's built around Apache Spark and bundles additional tools for data processing, file system access, and other supporting technologies into a single package.

In this article, we will cover Databricks Runtime in detail, including its core components and key features. We'll also explore the different Databricks Runtime versions, including their features, updates, and support lifecycles. Plus, we'll walk you through how to upgrade to the latest version.

What is Databricks Runtime?

Databricks Runtime (DBR) is the foundational set of software components that runs on the compute clusters managed by Databricks. It is built on top of a highly optimized version of Apache Spark and adds a number of components and updates that substantially improve the usability, performance, and security of big data analytics.

So, what's inside Databricks Runtime? Here are its key features and main components:

1) Optimized Apache Spark: Apache Spark is the powerhouse behind Databricks Runtime. It's a robust framework for big data processing and analytics. Every Databricks Runtime version comes with its own Apache Spark version, plus some additional improvements and fixes. Take Databricks Runtime 17.0, for example—it's got Apache Spark version 4.0.0, which means you get a bunch of bug fixes, ML features, and performance boosts.

2) DBIO (Databricks I/O) module: To speed things up, Databricks Runtime includes the DBIO (Databricks I/O) module, which optimizes the I/O performance of Spark in the cloud so you can process data faster and more efficiently.

3) DBES (Databricks Enterprise Security): For added security, DBES modules provide features like data encryption, fine-grained access control, and auditing. These features help you meet enterprise security standards.

4) Delta Lake: Delta Lake is an open source storage layer that works with Apache Spark. It provides ACID transactions, scalable metadata handling, unified streaming and batch data processing, and more.

5) MLflow Integration: Databricks Runtime includes MLflow for managing the machine learning lifecycle, including experimentation, reproducibility, and deployment.

6) Rapid Releases and Early Access: Databricks provides quicker release cycles compared to open source releases, offering the latest features and bug fixes to its users early.

7) Pre-installed Libraries: Databricks Runtime has pre-installed Java, Scala, Python, and R libraries. They enable a wide range of data science and machine learning tasks.

8) GPU Support: Databricks Runtime for Machine Learning includes pre-configured GPU libraries to accelerate demanding machine learning and deep learning tasks.

9) Databricks Services Integration: Databricks Runtime works seamlessly with other Databricks services, like notebooks, jobs, and cluster management, making your workflow smoother.

10) Auto-scaling: Databricks clusters, powered by DBR, offer auto-scaling capabilities that automatically adjust compute resources based on workload demand, reducing operational complexity and cost.

New releases keep adding to this list. Databricks Runtime 17.1, for example, introduced a number of useful upgrades and features, including:

- Expanded spatial SQL expressions and GEOMETRY and GEOGRAPHY data types

- Unity Catalog Python User-Defined Table Functions (UDTFs)

- Support for schema and catalog-level default collation

- Shared isolation execution environment for Batch Unity Catalog Python UDFs

- Better handling of JSON options with VARIANT

- display() supports Streaming Real-Time Mode

- Rate source supports Streaming Real-Time Mode

- Event-time timers supported for time-based windows

- Scalar Python UDFs support service credentials

- Improved schema listing

… and so much more!

These continuous updates keep Databricks Runtime at the cutting edge of big data and machine learning.


Databricks Runtime Versions—Features, Updates, Support Lifecycle

Databricks regularly releases new versions of its runtime to bring you new features, better performance, and tighter security. To get the most from your Databricks setup, you need to know how the different versions work and what their support status is. The list below covers the recent Databricks Runtime versions, including their types, the Apache Spark version each one uses, its release date, and its end-of-support date.

Databricks Runtime 17.3 LTS

- Editions/Types: LTS; LTS for Machine Learning

- Apache Spark Version: 4.0.0

- Release Date: Oct 22, 2025

- End of Support (EOS) Date: Oct 22, 2028

Databricks Runtime 17.2

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 4.0.0

- Release Date: Sep 16, 2025

- End of Support (EOS) Date: Mar 26, 2026

Databricks Runtime 17.1

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 4.0.0

- Release Date: Aug 1, 2025

- End of Support (EOS) Date: Feb 1, 2026

Databricks Runtime 17.0

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 4.0.0

- Release Date: Jun 24, 2025

- End of Support (EOS) Date: Nov 20, 2025

Databricks Runtime 16.4 LTS

- Editions/Types: LTS; LTS for Machine Learning

- Apache Spark Version: 3.5.2

- Release Date: May 9, 2025

- End of Support (EOS) Date: May 9, 2028.

Databricks Runtime 16.3

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.5.2

- Release Date: Mar 31, 2025

- End of Support (EOS) Date: Sep 1, 2025.

Databricks Runtime 16.2 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.5.2

- Release Date: Feb 5, 2025

- End of Support (EOS) Date: Aug 5, 2025.

Databricks Runtime 16.1 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.5.0

- Release Date: Dec 20, 2024

- End of Support (EOS) Date: Jun 20, 2025.

Databricks Runtime 16.0 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.5.0

- Release Date: Nov 11, 2024

- End of Support (EOS) Date: May 11, 2025.

Databricks Runtime 15.4 LTS

- Editions/Types: LTS; LTS for Machine Learning

- Apache Spark Version: 3.5.0

- Release Date: Aug 19, 2024

- End of Support (EOS) Date: Aug 19, 2027.

Databricks Runtime 15.3 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.5.0

- Release Date: Jun 24, 2024

- End of Support (EOS) Date: Jan 7, 2025.

Databricks Runtime 15.2 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.5.0

- Release Date: May 22, 2024

- End of Support (EOS) Date: Jan 7, 2025.

Databricks Runtime 15.1 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.5.0

- Release Date: Apr 30, 2024

- End of Support (EOS) Date: Oct 30, 2024.

Databricks Runtime 15.0 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.5.0

- Release Date: Mar 22, 2024

- End of Support (EOS) Date: May 31, 2024.

Databricks Runtime 14.3 LTS

- Editions/Types: LTS; LTS for Machine Learning

- Apache Spark Version: 3.5.0

- Release Date: Feb 1, 2024

- End of Support (EOS) Date: Feb 1, 2027.

Databricks Runtime 14.2 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.5.0

- Release Date: Nov 22, 2023

- End of Support (EOS) Date: Nov 5, 2024.

Databricks Runtime 14.1 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.5.0

- Release Date: Oct 11, 2023

- End of Support (EOS) Date: Feb 12, 2025.

Databricks Runtime 14.0 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.5.0

- Release Date: Sep 11, 2023

- End of Support (EOS) Date: Mar 11, 2024.

Databricks Runtime 13.3 LTS

- Editions/Types: LTS; LTS for Machine Learning

- Apache Spark Version: 3.4.1

- Release Date: Aug 22, 2023

- End of Support (EOS) Date: Aug 22, 2026.

Databricks Runtime 13.2 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.4.0

- Release Date: Jul 6, 2023

- End of Support (EOS) Date: Jan 6, 2024.

Databricks Runtime 13.1 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.4.0

- Release Date: Jun 1, 2023

- End of Support (EOS) Date: Dec 1, 2023.

Databricks Runtime 13.0 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.4.0

- Release Date: Apr 14, 2023

- End of Support (EOS) Date: Oct 14, 2023.

Databricks Runtime 12.2 LTS

- Editions/Types: LTS; LTS for Machine Learning

- Apache Spark Version: 3.3.2

- Release Date: Mar 1, 2023

- End of Support (EOS) Date: Mar 1, 2026.

Databricks Runtime 12.1 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.3.1

- Release Date: Jan 18, 2023

- End of Support (EOS) Date: Jul 18, 2023.

Databricks Runtime 12.0 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.3.1

- Release Date: Dec 14, 2022

- End of Support (EOS) Date: Jun 15, 2023.

Databricks Runtime 11.3 LTS

- Editions/Types: LTS; LTS for Machine Learning

- Apache Spark Version: 3.3.0

- Release Date: Oct 19, 2022

- End of Support (EOS) Date: Oct 19, 2025.

Databricks Runtime 11.2 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.3.0

- Release Date: Sep 7, 2022

- End of Support (EOS) Date: Mar 7, 2023.

Databricks Runtime 11.1 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.3.0

- Release Date: Jul 27, 2022

- End of Support (EOS) Date: Jan 27, 2023.

Databricks Runtime 11.0 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.3.0

- Release Date: Jun 16, 2022

- End of Support (EOS) Date: Dec 16, 2022.

Databricks Runtime 10.5 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.2.1

- Release Date: May 4, 2022

- End of Support (EOS) Date: Nov 4, 2022.

Databricks Runtime 10.4 LTS (EoS) ❌

- Editions/Types: LTS; LTS for Machine Learning

- Apache Spark Version: 3.2.1

- Release Date: Mar 18, 2022

- End of Support (EOS) Date: Mar 18, 2025.

Databricks Runtime 10.3 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.2.1

- Release Date: Feb 2, 2022

- End of Support (EOS) Date: Aug 2, 2022.

Databricks Runtime 10.2 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.2.0

- Release Date: Dec 22, 2021

- End of Support (EOS) Date: Jun 22, 2022.

Databricks Runtime 10.1 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.2.0

- Release Date: Nov 10, 2021

- End of Support (EOS) Date: Jun 14, 2022.

Databricks Runtime 10.0 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.2.0

- Release Date: Oct 20, 2021

- End of Support (EOS) Date: Apr 20, 2022.

Databricks Runtime 9.1 LTS (EoS) ❌

- Editions/Types: LTS; LTS for Machine Learning

- Apache Spark Version: 3.1.2

- Release Date: Sep 23, 2021

- End of Support (EOS) Date: Dec 19, 2024.

Databricks Runtime 9.0 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.1.2

- Release Date: Aug 17, 2021

- End of Support (EOS) Date: Feb 17, 2022.

Databricks Runtime 8.4 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.1.2

- Release Date: Jul 20, 2021

- End of Support (EOS) Date: Jan 20, 2022.

Databricks Runtime 8.3 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.1.1

- Release Date: Jun 8, 2021

- End of Support (EOS) Date: Jan 20, 2022.

Databricks Runtime 8.2 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.1.1

- Release Date: Apr 22, 2021

- End of Support (EOS) Date: Oct 22, 2021.

Databricks Runtime 8.1 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.1.1

- Release Date: Mar 22, 2021

- End of Support (EOS) Date: Sep 22, 2021.

Databricks Runtime 8.0 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.1.1

- Release Date: Mar 2, 2021

- End of Support (EOS) Date: Sep 2, 2021.

Databricks Runtime 7.6 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.0.1

- Release Date: Feb 8, 2021

- End of Support (EOS) Date: Aug 8, 2021.

Databricks Runtime 7.5 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.0.1

- Release Date: Dec 16, 2020

- End of Support (EOS) Date: Jun 16, 2021.

Databricks Runtime 7.4 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.0.0

- Release Date: Nov 3, 2020

- End of Support (EOS) Date: May 3, 2021.

Databricks Runtime 7.3 LTS (EoS) ❌

- Editions/Types: LTS; LTS for Machine Learning

- Apache Spark Version: 3.0.1

- Release Date: Sep 24, 2020

- End of Support (EOS) Date: Sep 24, 2023.

Databricks Runtime 7.2 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.0.x

- Release Date: (see Databricks archive)

- End of Support (EOS) Date: (see Databricks archive).

Databricks Runtime 7.1 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.0.x

- Release Date: (see Databricks archive)

- End of Support (EOS) Date: (see Databricks archive).

Databricks Runtime 7.0 (EoS) ❌

- Editions/Types: Standard; for Machine Learning

- Apache Spark Version: 3.0.x

- Release Date: June 2020

Databricks Runtime 6.x and earlier (6.6, 6.5, 6.4, 6.3, 6.2, 6.1, 6.0, etc.) ❌

- Editions/Types:

- Apache Spark Version: 2.x to 3.0 family depending on release

Databricks Runtime 5.x and earlier (5.5, 5.4, 5.3, 5.2, etc.) ❌

- Editions/Types:

- Apache Spark Version: 2.x family

Databricks Runtime 4.x, 3.x and older historical releases

- Editions/Types:

- Apache Spark Version: typically 1.x to 2.x depending on release
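
A list like this is easier to act on once it is encoded as data. The following is a minimal Python sketch, not an official Databricks tool: it copies a handful of rows from the list above into a dictionary and checks which versions are still inside their support window on a given date. The names `RUNTIMES`, `is_supported`, and `supported_versions` are made up for this example, and treating the end-of-support date as the first unsupported day is an assumption.

```python
from datetime import date

# A few rows copied from the list above: version -> (release date, end-of-support date).
RUNTIMES = {
    "17.3 LTS": (date(2025, 10, 22), date(2028, 10, 22)),
    "17.1": (date(2025, 8, 1), date(2026, 2, 1)),
    "16.4 LTS": (date(2025, 5, 9), date(2028, 5, 9)),
    "15.4 LTS": (date(2024, 8, 19), date(2027, 8, 19)),
    "14.2": (date(2023, 11, 22), date(2024, 11, 5)),
}


def is_supported(version: str, on: date) -> bool:
    """Return True if `on` falls inside the version's support window.

    Assumption: the end-of-support date itself counts as unsupported.
    """
    released, end_of_support = RUNTIMES[version]
    return released <= on < end_of_support


def supported_versions(on: date) -> list[str]:
    """List the versions from RUNTIMES that are supported on the given date."""
    return [version for version in RUNTIMES if is_supported(version, on)]


if __name__ == "__main__":
    print(supported_versions(date(2025, 12, 1)))
```

Extending the dictionary with the remaining rows would let you flag clusters that are about to fall out of support.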

What is LTS in Databricks Runtime?

LTS stands for Long-Term Support in the context of Databricks Runtime. LTS versions are specially designated releases that receive extended support and maintenance from Databricks. These versions are designed for customers who prioritize stability and predictability in their data infrastructure.

Here are the benefits of Long-Term support versions:

- Long-term Support: You get support for about three years, which is far longer than the roughly six months that standard/regular releases receive, as the dates in the version list above show.

- Stable: LTS versions are super stable because they're put through extra testing. That makes them perfect for production environments.

- Easily Predictable Update/Release Cycle: With Long-Term Support, you know exactly when you'll need to upgrade, so you can plan ahead and avoid constant major changes.

- Frequent Security Updates: LTS versions get critical bug fixes and security patches during their extended support period.

So, what's the main difference between LTS and regular releases? It comes down to how you plan to use them and how long you'll get support. Regular releases bring new features faster, but you'll need to upgrade more often and support won't last as long. They're a good fit for anyone who wants the latest features and doesn't mind updating frequently. LTS versions are better for organizations that need stability and support for a longer time, even if it means they won't get the newest features right away.
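
To put numbers on that difference, here is a small Python sketch that computes the support window for one LTS release and one regular release, using the release and end-of-support dates from the version list in this article. The helper name `support_days` is invented for illustration.

```python
from datetime import date


def support_days(released: date, end_of_support: date) -> int:
    """Length of the support window in days."""
    return (end_of_support - released).days


# Dates taken from the version list in this article.
lts_days = support_days(date(2025, 5, 9), date(2028, 5, 9))       # 16.4 LTS
standard_days = support_days(date(2025, 8, 1), date(2026, 2, 1))  # 17.1

print(f"16.4 LTS: {lts_days} days (~{lts_days / 365:.1f} years)")
print(f"17.1:     {standard_days} days (~{standard_days / 30:.0f} months)")
```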

How to Upgrade Databricks Runtime to the Latest 17.x Version?

Want the latest features, improved performance, and better security? Upgrade your Databricks Runtime to the newest version. Here's how to do it in a few easy steps:

Step 1—Review Release Notes and Compatibility

First and foremost, don't migrate until you've reviewed the release notes and compatibility guides for both Databricks Runtime x.x (current version) and 17.x. Take a close look at the new features, improvements, and any changes in 17.x that might affect your workflow.

See Databricks Runtime release notes versions and compatibility
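
One quick way to decide how closely to read the release notes is to check whether the upgrade also changes the bundled Apache Spark major version. The sketch below is a rough illustration under that assumption: it uses Spark versions copied from the version list in this article, and the names `SPARK_BY_RUNTIME`, `spark_major`, and `crosses_spark_major` are hypothetical.

```python
# Runtime -> bundled Apache Spark version, copied from the list in this article.
SPARK_BY_RUNTIME = {
    "17.3": "4.0.0",
    "17.1": "4.0.0",
    "16.4": "3.5.2",
    "15.4": "3.5.0",
    "13.3": "3.4.1",
}


def spark_major(runtime: str) -> int:
    """Major version of the Spark release bundled with a runtime."""
    return int(SPARK_BY_RUNTIME[runtime].split(".")[0])


def crosses_spark_major(current: str, target: str) -> bool:
    """True when the upgrade moves to a different Spark major version."""
    return spark_major(current) != spark_major(target)


for current in ("15.4", "16.4"):
    flag = crosses_spark_major(current, "17.3")
    print(f"{current} -> 17.3 crosses a Spark major version: {flag}")
```

An upgrade that crosses a Spark major version usually deserves a longer test cycle than one that stays within the same Spark line.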

Step 2—Login to Databricks

Start by opening your web browser and heading to your Databricks workspace URL. Next, log in to your Databricks account with your credentials.

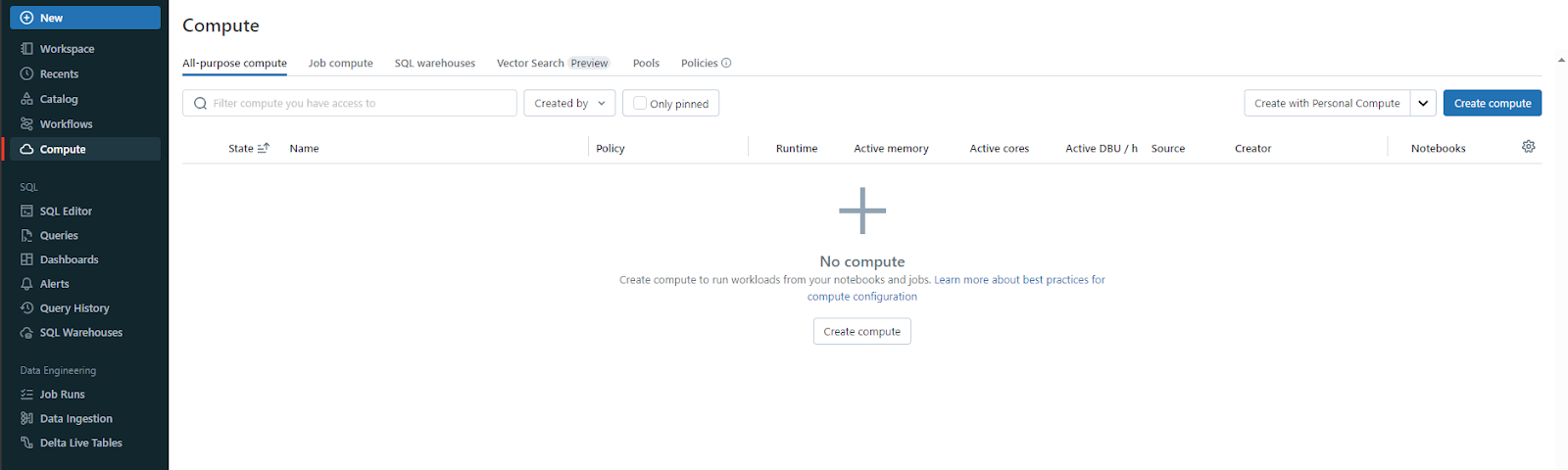

Step 3—Access the Databricks Cluster Page

Once logged in, head over to the Compute page in your Databricks workspace. You'll land on the Databricks Clusters page, where you'll see a few tabs. Hit the All-Purpose compute tab. Now you'll see a list of your existing cluster resources, their status, and details. Look to the top right corner—there's a "Create Compute" button. Click it to start setting up a brand new isolated Spark environment.

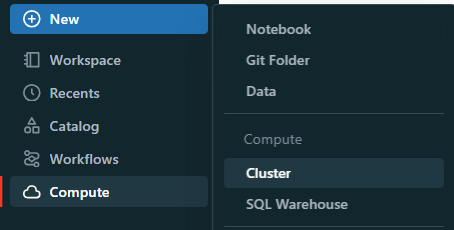

You can also reach the same page by clicking +New ➤ Cluster. Either way, you'll end up on the New Databricks Cluster page.

Step 4—Select or Create a Databricks Cluster

If you're upgrading a cluster, find and select it from the list. If you're creating a new cluster with the latest runtime, click “Create Compute”.

See Step-By-Step Guide to Create Databricks Cluster

Step 5—Create a Test/Dev Environment (Not in Prod)

Set up a development/test environment with a cluster running Databricks Runtime 17.x. This way, you can test your workflows, libraries, and dependencies in the new runtime without messing with your production environment.

Step 6—Configure Cluster for Databricks Runtime 17.x

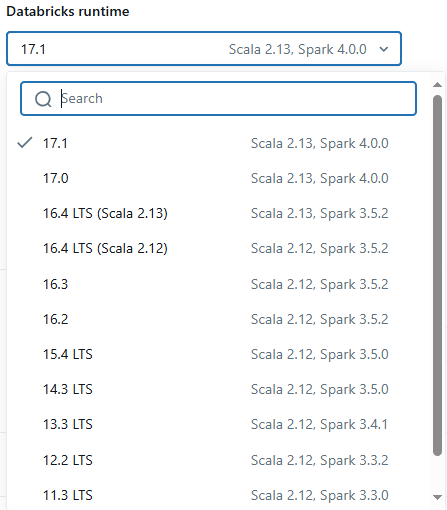

Head to the cluster configuration page and find the “Databricks Runtime Version” dropdown. Pick the 17.x version you want—like 17.1 or 17.0.
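
If you manage clusters through the API rather than the UI, the same choice is expressed as a field in the cluster specification. The sketch below only builds and prints a request body; it does not call Databricks. The field names follow the Clusters API, but the cluster name, the runtime key `17.3.x-scala2.13`, and the node type are assumptions that you should confirm against your own workspace and cloud provider.

```python
import json


def build_cluster_spec(name: str, runtime_key: str, node_type: str, workers: int) -> dict:
    """Build a request body in the shape the Clusters API accepts."""
    return {
        "cluster_name": name,
        "spark_version": runtime_key,  # despite the name, this selects the Databricks Runtime
        "node_type_id": node_type,
        "num_workers": workers,
    }


spec = build_cluster_spec(
    name="dbr17-upgrade-test",       # hypothetical cluster name
    runtime_key="17.3.x-scala2.13",  # assumed key; confirm it in your workspace
    node_type="i3.xlarge",           # assumed AWS node type; differs per cloud
    workers=2,
)
print(json.dumps(spec, indent=2))
```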

Step 7—Apply and Restart Cluster

Pick your new runtime version and make any other changes you need to. Then, hit Edit ➤ Apply Changes / Confirm.

If you're updating an existing cluster, you'll need to restart it for the changes to take effect. Just click "Restart" to do that. For a brand new cluster, click "Create Cluster" to start it with the new runtime.

Step 8—Verify the Upgrade

After your cluster is up and running, double-check that it's running the right Databricks Runtime version. To do that, head to the configuration page and look for the "Databricks Runtime Version" field. Alternatively, run the following command in a notebook cell to print the Databricks Runtime version:

import os

print(os.environ["DATABRICKS_RUNTIME_VERSION"])

This should output the current Databricks Runtime version.
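
For automated checks, the same environment variable can be parsed so that a job fails fast on the wrong runtime. This is a minimal sketch that assumes the value looks like a plain `major.minor` string such as `17.1`; the function names are made up for this example, and outside a Databricks cluster the variable is simply absent.

```python
import os


def parse_runtime_version(raw: str) -> tuple[int, int]:
    """Split a value such as '17.1' into (major, minor) integers."""
    major, minor = raw.split(".")[:2]
    return int(major), int(minor)


def check_runtime(expected_major: int) -> str:
    """Describe whether the current environment runs the expected major version."""
    raw = os.environ.get("DATABRICKS_RUNTIME_VERSION")
    if raw is None:
        return "not running on a Databricks cluster"
    major, minor = parse_runtime_version(raw)
    status = "ok" if major == expected_major else "unexpected"
    return f"runtime {major}.{minor}: {status}"


print(check_runtime(expected_major=17))
```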

Step 9—Update Libraries and Dependencies

Make sure the libraries and dependencies in your workflows work with Databricks Runtime 17.x. You might need to update them to the latest versions or find new ones that work.
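
A simple way to spot library drift is to snapshot package versions on the old cluster and compare them with what the new runtime ships. The sketch below uses Python's standard `importlib.metadata` module; the helper names and the versions in `old_snapshot` are hypothetical and purely illustrative.

```python
from importlib import metadata
from typing import Optional


def installed_versions(packages: list[str]) -> dict[str, Optional[str]]:
    """Look up the installed version of each package, or None if it is missing."""
    found = {}
    for name in packages:
        try:
            found[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            found[name] = None
    return found


def changed_packages(before: dict, after: dict) -> dict[str, tuple]:
    """Return packages whose version differs between two snapshots."""
    return {
        name: (before.get(name), after.get(name))
        for name in sorted(set(before) | set(after))
        if before.get(name) != after.get(name)
    }


# Hypothetical snapshot taken on the old cluster; the versions are illustrative only.
old_snapshot = {"pandas": "1.5.3", "numpy": "1.23.5"}
new_snapshot = installed_versions(list(old_snapshot))
for name, (old, new) in changed_packages(old_snapshot, new_snapshot).items():
    print(f"{name}: {old} -> {new}")
```

Any package that shows up in the output is a candidate for a closer look at its changelog before you move production workloads.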

Step 10—Validate Workflows and Jobs

Test your current workflows and jobs in the dev environment to make sure they work as expected with Databricks Runtime 17.x. Keep an eye out for errors or performance regressions and fix them as needed.

Next, run your ETL pipelines, data processing scripts, and machine learning models. Check the output and performance to see how they're doing. Also, take a look at the log files for any warnings or errors that might have popped up.
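
Checking the output can be partly automated by comparing a summary of the results produced on the old and new runtimes. The following sketch is one possible approach, not a Databricks feature: it reduces each result set to a row count plus an order-independent checksum. The sample rows and function names are hypothetical, and for large tables you would compute a comparable summary inside Spark rather than collecting rows.

```python
import hashlib


def summarize(rows: list[tuple]) -> dict:
    """Reduce a result set to a row count and an order-independent checksum."""
    digest = hashlib.sha256()
    for row in sorted(repr(r) for r in rows):
        digest.update(row.encode("utf-8") + b"\n")
    return {"rows": len(rows), "checksum": digest.hexdigest()}


def outputs_match(baseline: list[tuple], candidate: list[tuple]) -> bool:
    """True when both runs produced the same rows, ignoring row order."""
    return summarize(baseline) == summarize(candidate)


# Hypothetical results collected from the same job on the old and new runtime.
old_run = [(1, "a", 10.0), (2, "b", 20.5)]
new_run = [(2, "b", 20.5), (1, "a", 10.0)]
print("outputs match:", outputs_match(old_run, new_run))
```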

Step 11—Migrate to Production

Now that you've tested the migration in development, it's time to update production to Databricks Runtime 17.x. Just follow the same steps you did in development, but this time with your production clusters and workflows. Make sure to back up all your critical data and settings—you don't want to lose anything!

Next, update the runtime version for your production clusters. Finally, roll out the update bit by bit to avoid downtime.
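
Rolling out bit by bit can be planned as a series of waves, starting with the least critical clusters. Here is a minimal sketch of that idea; the cluster names and the `rollout_waves` helper are hypothetical, and the wave size is something you would choose based on your own risk tolerance.

```python
def rollout_waves(cluster_names: list[str], wave_size: int) -> list[list[str]]:
    """Split clusters into consecutive waves of at most `wave_size` each."""
    if wave_size < 1:
        raise ValueError("wave_size must be at least 1")
    return [
        cluster_names[i:i + wave_size]
        for i in range(0, len(cluster_names), wave_size)
    ]


# Hypothetical production clusters, ordered from least to most critical.
clusters = ["reporting", "adhoc-analytics", "etl-nightly", "ml-training", "streaming-core"]
for number, wave in enumerate(rollout_waves(clusters, wave_size=2), start=1):
    print(f"wave {number}: {wave}")
```

Upgrading one wave at a time and pausing to check results keeps a bad upgrade from affecting every cluster at once.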

Step 12—Monitor and Optimize

After the migration, closely monitor the performance and stability of your clusters and workflows. Use Databricks observability tools like Chaos Genius to track resource usage, performance, and any potential issues.
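
Whatever tool you use, the core check is the same: compare job durations before and after the upgrade and flag anything that got noticeably slower. The sketch below illustrates this with made-up durations and a hypothetical `find_regressions` helper; the 10% tolerance is an arbitrary assumption.

```python
from statistics import median


def find_regressions(before: dict[str, list[float]],
                     after: dict[str, list[float]],
                     tolerance: float = 0.10) -> dict[str, float]:
    """Return jobs whose median duration grew by more than `tolerance`."""
    slower = {}
    for job, old_runs in before.items():
        new_runs = after.get(job)
        if not old_runs or not new_runs:
            continue  # skip jobs without data on both sides
        change = median(new_runs) / median(old_runs) - 1.0
        if change > tolerance:
            slower[job] = round(change, 3)
    return slower


# Hypothetical job durations in seconds, before and after the upgrade.
before = {"etl-nightly": [600, 620, 610], "ml-training": [1800, 1750, 1820]}
after = {"etl-nightly": [590, 605, 600], "ml-training": [2100, 2150, 2080]}
print(find_regressions(before, after))
```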

Want to see what Chaos Genius can do? Give it a shot—it's free!


Further Reading

If you want to get more info about Databricks Runtime, here are some great resources:

- Databricks Runtime release notes versions and compatibility

- Databricks Clusters 101

- Databricks Workspaces 101

Conclusion

And that’s a wrap! Databricks Runtime is the set of software artifacts that run on the clusters of machines managed by Databricks. It includes Apache Spark and adds a number of components and updates that substantially improve the usability, performance, and security of big data analytics. Databricks Runtime comes in many versions, each with its own set of features, so it's important to know what each version offers when you pick one for your data project. Once you understand how the runtime versions work and how to upgrade, you can get far more out of Databricks for your projects.

In this article, we have covered:

- What is Databricks Runtime?

- Databricks Runtime Versions—Features, Updates, Support Lifecycle

- What is LTS in Databricks Runtime?

- How to Upgrade Databricks Runtime to the Latest 17.x Version?

… and more!

FAQs

What is Databricks Runtime?

Databricks Runtime is a set of software artifacts that run on Databricks clusters. It includes Apache Spark and additional components that enhance performance, usability, and security for big data analytics.

What is the purpose of LTS versions?

LTS versions are maintained for a longer period, which provides stability and extended support for critical production environments.

What components are included in Databricks Runtime?

Beyond Apache Spark, DBR includes components like Databricks I/O (DBIO) for enhanced performance and leverages Unity Catalog and other platform features for security and reduced operational complexity.

How does Databricks Runtime improve performance?

Through optimizations like the Databricks I/O module (DBIO), which significantly enhances the performance of Spark in cloud environments.

What security features does Databricks Runtime offer?

Databricks Runtime benefits from the comprehensive security features of the Databricks Lakehouse Platform, including Unity Catalog, which provides data encryption, fine-grained access control, auditing, and compliance with standards such as HIPAA and SOC2.

How often are new Databricks Runtime versions released?

Databricks frequently releases new versions, offering rapid access to the latest features and bug fixes ahead of open source releases.

Can I upgrade my Databricks Runtime version?

Yes, you can upgrade to newer versions to take advantage of improvements and new features. The process typically involves restarting the cluster with the new runtime version.

What is the role of Apache Spark in Databricks Runtime?

Apache Spark is the core engine for large-scale data processing, and Databricks Runtime builds upon it with additional enhancements.

How does Databricks Runtime handle compatibility?

Each runtime version is compatible with specific versions of Spark, Python, Java, and other libraries, ensuring a stable and tested environment.

What are some common use cases for Databricks Runtime?

Common use cases include data engineering, machine learning, real-time analytics, and big data processing.

How does Databricks Runtime support machine learning?

It includes specialized versions like Databricks Runtime for Machine Learning, which comes with pre-installed ML libraries and tools.

What operating systems does Databricks Runtime support?

Databricks Runtime operates on optimized Linux distributions (such as Ubuntu LTS) within the Databricks-managed environment. Users typically do not directly manage the underlying operating system.

Can I customize the environment in Databricks Runtime?

Yes, users can install additional libraries and packages to tailor the runtime environment to their specific needs.

Does Databricks Runtime include Apache Spark updates?

Yes, each Databricks Runtime version comes with its own Apache Spark version, plus additional improvements and fixes.