Businesses today handle huge volumes of data, and moving it from one location or region to another can get expensive. Snowflake, a popular cloud-based data platform, offers high scalability, data storage, compute, low latency, advanced analytics, and flexible pay-per-use pricing, but it's important to understand its data transfer costs to avoid budget surprises. Let's take a look at Snowflake data transfer costs so you can make informed decisions and keep your spending under control.

In this article, we'll explain Snowflake's data transfer costs, including data ingress and egress fees, how they vary depending on cloud providers and regions, and how to optimize Snowflake costs and maximize ROI.

Does Snowflake Charge for Data Transfer?

Snowflake calculates data transfer costs from the amount of data transferred, the per-TB rate that applies, and the source region and destination (region and cloud provider) of the transfer. Before tackling how to reduce these costs, it is important to understand how Snowflake calculates them.

Snowflake data transfer cost structure depends on two factors: ingress and egress.

Data Ingress vs. Data Egress

Ingress, meaning transferring data into Snowflake, is free of charge from Snowflake's side (the cloud provider hosting your source data may still bill its own egress fee for sending it). Egress, meaning sending data from Snowflake to another region or cloud platform, incurs a per-byte fee. Transfers that stay on the same cloud provider and in the same region are free. Transfers that leave the region or the cloud platform, for example through external tables, external functions, or Data Lake Export, are billed per byte at rates that vary by cloud provider and source region.
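These rules can be expressed as a small check. The Python sketch below is a simplified model written for this article: `Location` and `snowflake_charges_for` are hypothetical helpers (not a Snowflake API), and the function models only Snowflake's own fees, not anything your source cloud provider may bill.

```python
from typing import NamedTuple


class Location(NamedTuple):
    cloud: str   # e.g. "AWS", "Azure", "GCP"
    region: str  # e.g. "us-east-1"


def snowflake_charges_for(direction: str, account: Location, other: Location) -> bool:
    """Return True if Snowflake bills this transfer under the ingress/egress rules.

    direction: "ingress" (data coming into the account) or "egress" (data leaving it).
    """
    if direction == "ingress":
        # Snowflake does not charge for loading data in.
        return False
    if direction != "egress":
        raise ValueError(f"unknown direction: {direction!r}")
    # Egress is free only when it stays on the same cloud AND in the same region.
    return account != other


account = Location("AWS", "us-east-1")
print(snowflake_charges_for("egress", account, Location("AWS", "us-east-1")))  # False
print(snowflake_charges_for("egress", account, Location("AWS", "us-west-2")))  # True
```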

Take a look at some Snowflake pricing tables for comparison to get an idea of what the difference in cost could look like:

1) Snowflake Data Transfer Costs in AWS

Here is the breakdown of Snowflake data transfer prices on AWS (per TB):

| Cloud Provider | Data Transfer Source Region | To Same Cloud Provider, Same Region (per TB) | To Same Cloud Provider, Different Region (per TB) | To Different Cloud Provider or Internet (per TB) |
|---|---|---|---|---|
| AWS | US East (Northern Virginia) | $0.00 | $20.00 | $90.00 |
| AWS | US West (Oregon) | $0.00 | $20.00 | $90.00 |
| AWS | EU Dublin | $0.00 | $20.00 | $90.00 |
| AWS | EU Frankfurt | $0.00 | $20.00 | $90.00 |
| AWS | AP Sydney | $0.00 | $140.00 | $140.00 |
| AWS | AP Singapore | $0.00 | $90.00 | $120.00 |
| AWS | Canada Central | $0.00 | $20.00 | $90.00 |
| AWS | US East 2 (Ohio) | $0.00 | $20.00 | $90.00 |
| AWS | AP Northeast 1 (Tokyo) | $0.00 | $90.00 | $114.00 |
| AWS | AP Mumbai | $0.00 | $86.00 | $109.30 |
| AWS | US East 1 Commercial Gov | $0.00 | $20.00 | $90.00 |
| AWS | Europe (London) | $0.00 | $20.00 | $90.00 |
| AWS | Asia Pacific (Seoul) | $0.00 | $80.00 | $126.00 |
| AWS | US Gov West 1 | $0.00 | $30.00 | $155.00 |
| AWS | US Gov West 1 (Fedramp High Plus) | $0.00 | $30.00 | $155.00 |
| AWS | Europe (Stockholm) | $0.00 | $20.00 | $90.00 |
| AWS | Asia Pacific (Osaka) | $0.00 | $90.00 | $114.00 |
| AWS | South America East 1 (São Paulo) | $0.00 | $138.00 | $150.00 |
| AWS | EU (Paris) | $0.00 | $20.00 | $90.00 |
| AWS | Asia Pacific (Jakarta) | $0.00 | $100.00 | $132.00 |
| AWS | US Gov East 1 (Fedramp High Plus) | $0.00 | $30.00 | $155.00 |
| AWS | EU (Zurich) | $0.00 | $20.00 | $90.00 |
| AWS | US Gov West 1 (DoD) | $0.00 | $30.00 | $155.00 |
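To turn the table into an estimate, multiply the number of terabytes moved by the rate for the route. This Python sketch copies three rows from the AWS table into a hypothetical `AWS_RATES_PER_TB` dictionary and assumes 1 TB = 10**12 bytes. Check current rates and units against your own Snowflake bill before relying on the numbers.

```python
# Per-TB rates (USD) copied from three rows of the AWS table.
AWS_RATES_PER_TB = {
    "US East (Northern Virginia)": {"same_region": 0.00, "different_region": 20.00, "other_cloud_or_internet": 90.00},
    "AP Sydney": {"same_region": 0.00, "different_region": 140.00, "other_cloud_or_internet": 140.00},
    "AP Singapore": {"same_region": 0.00, "different_region": 90.00, "other_cloud_or_internet": 120.00},
}

BYTES_PER_TB = 10**12  # assumption: decimal terabytes


def estimate_egress_usd(bytes_transferred: int, source_region: str, destination: str) -> float:
    """Estimate the egress charge for one transfer: TB moved x per-TB rate."""
    rate = AWS_RATES_PER_TB[source_region][destination]
    return round(bytes_transferred / BYTES_PER_TB * rate, 2)


# 5 TB from US East to another AWS region: 5 x $20
print(estimate_egress_usd(5 * 10**12, "US East (Northern Virginia)", "different_region"))  # 100.0
```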

2) Snowflake Data Transfer Costs in Microsoft Azure

Here is the breakdown of Snowflake data transfer prices on Azure (per TB):

| Cloud Provider | Data Transfer Source Region | Same Region (per TB) | Different Region, Same Continent (per TB) | Different Continent (per TB) | Different Cloud Provider or Internet (per TB) |
|---|---|---|---|---|---|
| Azure | East US 2 (Virginia) | $0.00 | $20.00 | $50.00 | $87.50 |
| Azure | West US 2 (Washington) | $0.00 | $20.00 | $50.00 | $87.50 |
| Azure | West Europe (Netherlands) | $0.00 | $20.00 | $50.00 | $87.50 |
| Azure | Australia East (New South Wales) | $0.00 | $80.00 | $80.00 | $120.00 |
| Azure | Canada Central (Toronto) | $0.00 | $20.00 | $50.00 | $87.50 |
| Azure | Southeast Asia (Singapore) | $0.00 | $80.00 | $80.00 | $120.00 |
| Azure | Switzerland North | $0.00 | $20.00 | $50.00 | $87.50 |
| Azure | US Gov Virginia | $0.00 | $20.00 | $50.00 | $87.50 |
| Azure | US Central (Iowa) | $0.00 | $20.00 | $50.00 | $87.50 |
| Azure | North Europe (Ireland) | $0.00 | $20.00 | $50.00 | $87.50 |
| Azure | Japan East (Tokyo) | $0.00 | $80.00 | $80.00 | $120.00 |
| Azure | UAE North (Dubai) | $0.00 | $80.00 | $80.00 | $120.00 |
| Azure | South Central US (Texas) | $0.00 | $20.00 | $50.00 | $87.50 |
| Azure | Central India (Pune) | $0.00 | $80.00 | $80.00 | $120.00 |
| Azure | UK South (London) | $0.00 | $20.00 | $50.00 | $87.50 |
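On Azure, the rate also depends on whether the destination is on the same continent. The sketch below prices a hypothetical month of egress from East US 2 across several tiers. The tier names and workload figures are made up for illustration; only the per-TB rates come from the Azure table.

```python
# Per-TB rates (USD) for the East US 2 (Virginia) row of the Azure table.
EAST_US_2 = {
    "same_region": 0.00,
    "same_continent": 20.00,
    "different_continent": 50.00,
    "other_cloud_or_internet": 87.50,
}


def monthly_cost_usd(tb_by_tier: dict[str, float], rates: dict[str, float]) -> float:
    """Sum TB x per-TB rate for every tier used in a month."""
    return sum(tb * rates[tier] for tier, tb in tb_by_tier.items())


# Hypothetical month out of East US 2: 3 TB to another US Azure region,
# 1 TB to an Azure region in Europe, 0.5 TB to an S3 bucket (a different cloud).
workload = {"same_continent": 3.0, "different_continent": 1.0, "other_cloud_or_internet": 0.5}
print(monthly_cost_usd(workload, EAST_US_2))  # 153.75
```

Breaking the bill down by tier like this makes it obvious which destinations dominate the cost.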

3) Snowflake Data Transfer Costs in GCP

Here is the breakdown of Snowflake data transfer prices on GCP (per TB):

| Cloud Provider | Data Transfer Source Region | Same Cloud, Same Region | Same Cloud, Different Region, Same Continent | Same Cloud, Different Continent (excl. Oceania) | Same Cloud, Oceania | Different Cloud, Same Continent | Different Cloud, Different Continent (excl. Oceania) | Different Cloud, Oceania |
|---|---|---|---|---|---|---|---|---|
| GCP | US Central 1 (Iowa) | $0.00 | $10.00 | $80.00 | $150.00 | $120.00 | $120.00 | $190.00 |
| GCP | US East 4 (N. Virginia) | $0.00 | $10.00 | $80.00 | $150.00 | $120.00 | $120.00 | $190.00 |
| GCP | Europe West 4 (Netherlands) | $0.00 | $20.00 | $80.00 | $150.00 | $120.00 | $120.00 | $190.00 |
| GCP | Europe West 2 (London) | $0.00 | $20.00 | $80.00 | $150.00 | $120.00 | $120.00 | $190.00 |
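The GCP table has the most columns because Oceania is priced separately. Here is one way to pick the right column, as a Python sketch for the US Central 1 (Iowa) row. It assumes the Oceania columns apply when the destination is in Oceania, and that a transfer to a different cloud in the same region falls under "same continent". Both are our reading of the table, not documented rules.

```python
# Per-TB rates (USD) from the US Central 1 (Iowa) row of the GCP table.
US_CENTRAL_1 = {
    ("same_cloud", "same_region"): 0.00,
    ("same_cloud", "same_continent"): 10.00,
    ("same_cloud", "different_continent"): 80.00,
    ("same_cloud", "oceania"): 150.00,
    ("other_cloud", "same_continent"): 120.00,
    ("other_cloud", "different_continent"): 120.00,
    ("other_cloud", "oceania"): 190.00,
}


def gcp_column(same_cloud: bool, same_region: bool, same_continent: bool,
               dest_in_oceania: bool) -> tuple[str, str]:
    """Pick the table column for a transfer (Oceania columns assumed to apply to Oceania destinations)."""
    provider = "same_cloud" if same_cloud else "other_cloud"
    if same_cloud and same_region:
        return provider, "same_region"
    if dest_in_oceania:
        return provider, "oceania"
    # No "different cloud, same region" column exists; same continent covers it.
    return provider, "same_continent" if same_continent else "different_continent"


def gcp_egress_usd(tb: float, same_cloud: bool, same_region: bool,
                   same_continent: bool, dest_in_oceania: bool) -> float:
    """TB moved x the per-TB rate of the selected column."""
    return tb * US_CENTRAL_1[gcp_column(same_cloud, same_region, same_continent, dest_in_oceania)]


# 2 TB from US Central 1 to a GCP region in Australia
print(gcp_egress_usd(2, same_cloud=True, same_region=False, same_continent=False, dest_in_oceania=True))
```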


Snowflake Features that Trigger Data Transfer Costs

Transferring data from a Snowflake account to a different region within the same cloud platform or to a different cloud platform using certain Snowflake features comes with Snowflake data transfer costs. These costs may be triggered by several activities, such as:

1) Unloading Data in Snowflake

Unloading data in Snowflake is the process of exporting data from a table or query result to files in a stage, which can be backed by external storage such as Amazon S3, Azure Blob Storage, or Google Cloud Storage. This makes the extracted data available to other applications for analytics, reporting, or visualization. Unloading can be run manually or automatically; either way, first consider how often it runs, how much data it moves, and the storage and transfer costs it incurs.

To unload data from Snowflake, use the COPY INTO <location> command. The target can be an internal stage, a named external stage, or an external location (a cloud storage URL), and it does not have to be in the same region or on the same cloud as your Snowflake account. When it isn't, the unload incurs data transfer charges. The complete syntax for the command is as follows:

```sql
COPY INTO { internalStage | externalStage | externalLocation }
     FROM { [<namespace>.]<table_name> | ( <query> ) }
[ PARTITION BY <expr> ]
[ FILE_FORMAT = ( { FORMAT_NAME = '[<namespace>.]<file_format_name>' |
                    TYPE = { CSV | JSON | PARQUET } [ formatTypeOptions ] } ) ]
[ copyOptions ]
[ VALIDATION_MODE = RETURN_ROWS ]
[ HEADER ]
```

Let's break this syntax down piece by piece.

To indicate where the data will be unloaded, follow COPY INTO with an internal stage, a named external stage, or an external location URL. For instance:

- Internal or external stage: `COPY INTO @your_stage/your_folder/`
- Google Cloud Storage bucket: `COPY INTO 'gcs://bucket-name/your_folder/'`
- Amazon S3: `COPY INTO 's3://bucket-name/your_folder/'`
- Azure Blob Storage: `COPY INTO 'azure://account.blob.core.windows.net/container-name/your_folder/'`

The FROM clause specifies the source of the data, which can be a table or a query. For example:

- Copy an entire table: `FROM SNOWFLAKE_SAMPLE_DATA.USERS`
- Copy the result of a query: `FROM (SELECT USER_ID, USER_FIRST_NAME, USER_LAST_NAME, USER_EMAIL FROM SNOWFLAKE_SAMPLE_DATA.USERS)`

When unloading directly to an external location URL, specify the STORAGE_INTEGRATION parameter (or explicit CREDENTIALS), which authorizes Snowflake to write to that location. A named external stage already carries this configuration, so you don't repeat it in the COPY INTO statement.

The PARTITION BY parameter splits the unloaded rows into separate files based on a string expression; each distinct value of the expression becomes part of the file path. The FILE_FORMAT parameter sets the file type and compression, and copyOptions holds optional settings (such as MAX_FILE_SIZE, SINGLE, or DETAILED_OUTPUT) that further customize the results of the COPY INTO command.
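To see what PARTITION BY does to the output layout, here is a local Python illustration (not Snowflake code) that groups rows by the same expression as `LEFT(USER_LAST_NAME, 1)`. The sample rows and the `partition_rows` helper are made up for this example. Partitioning changes how the output is split into files; on its own it does not reduce the volume that leaves your account.

```python
from collections import defaultdict


def partition_rows(rows, key):
    """Group rows by a partition key, mirroring how PARTITION BY <expr> splits output files."""
    groups = defaultdict(list)
    for row in rows:
        groups[key(row)].append(row)
    return dict(groups)


users = [  # hypothetical sample rows
    {"USER_ID": 1, "USER_LAST_NAME": "Smith"},
    {"USER_ID": 2, "USER_LAST_NAME": "Sato"},
    {"USER_ID": 3, "USER_LAST_NAME": "Ng"},
]

# Python equivalent of LEFT(USER_LAST_NAME, 1)
parts = partition_rows(users, lambda r: r["USER_LAST_NAME"][:1])
for prefix, rows in sorted(parts.items()):
    print(f"your_folder/{prefix}/ -> {len(rows)} row(s)")
```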

To ensure data consistency, consider creating a named file format where you can define file type, compression, and other parameters upfront. The VALIDATION_MODE parameter is used to return the rows instead of writing to your destination, allowing you to confirm that you have the correct data before unloading it.

Lastly, the HEADER parameter specifies whether you want the data to include headers.

Example query:

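```sql
-- Unload selected columns straight to an S3 prefix (an external location URL).
COPY INTO 's3://bucket-name/your_folder/'
  FROM (SELECT USER_ID, USER_FIRST_NAME, USER_LAST_NAME, USER_EMAIL FROM SNOWFLAKE_SAMPLE_DATA.USERS)
  -- Integration that authorizes Snowflake to write to the bucket.
  STORAGE_INTEGRATION = YOUR_INTEGRATION
  -- One path prefix per first letter of the last name.
  PARTITION BY LEFT(USER_LAST_NAME, 1)
  -- CSV output; AUTO compresses unloaded CSV files with gzip.
  FILE_FORMAT = (TYPE = 'CSV' COMPRESSION = 'AUTO')
  -- Return one row per file written (name, size, row count).
  DETAILED_OUTPUT = TRUE
  HEADER = TRUE;
```

Note: Always confirm that the data has been unloaded successfully at the destination, and take appropriate measures to prevent data leaks.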
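Because DETAILED_OUTPUT = TRUE returns one row per unloaded file, you can total the file sizes to see how many bytes an unload actually moved. The Python sketch below assumes those rows carry FILE_NAME, FILE_SIZE, and ROW_COUNT columns and uses made-up values; `unload_egress_usd` is a hypothetical helper, and 1 TB is taken as 10**12 bytes.

```python
BYTES_PER_TB = 10**12  # assumption: decimal terabytes

# Hypothetical DETAILED_OUTPUT rows: 62,500 files of 16,000,000 bytes each (1 TB in total).
unload_result = [
    {"FILE_NAME": f"your_folder/part_{i}.csv.gz", "FILE_SIZE": 16_000_000, "ROW_COUNT": 100_000}
    for i in range(62_500)
]


def unload_egress_usd(rows, rate_per_tb):
    """Sum FILE_SIZE over the unloaded files and price the total at a per-TB rate."""
    total_bytes = sum(r["FILE_SIZE"] for r in rows)
    return round(total_bytes / BYTES_PER_TB * rate_per_tb, 2)


# Source AWS US East, destination on a different cloud: $90 per TB in the AWS table.
print(unload_egress_usd(unload_result, 90.00))  # 90.0
```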

2) Replicating Data in Snowflake

Replicating data is the process of creating a copy of data and storing it in a separate location. This process can be highly valuable for disaster recovery or for providing read-only access for reporting purposes. There are different methods of database replication that can be used, such as taking an up-to-date data snapshot of the primary database and copying it to the secondary database.

For Snowflake accounts, replication copies data to another Snowflake account, which can be hosted in a different region or on a different cloud platform than the origin account. This keeps data available even if the primary account suffers an outage or other issues, and replicating data to different locations can lower latency and improve performance for users in various regions worldwide. Data transfer charges apply when the target account is in a different region or on a different cloud platform, and each refresh also consumes compute credits.
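The first refresh of a secondary database copies all of its data; later refreshes copy only the data that changed since the previous one. The Python sketch below estimates the transfer part of the bill under an assumed steady daily change volume. `replication_egress_usd` is a hypothetical helper, the figures are illustrative, and it leaves out the compute credits that replication also consumes.

```python
def replication_egress_usd(initial_tb: float, daily_changed_gb: int, refreshes: int,
                           rate_per_tb: float) -> float:
    """Initial full copy plus one incremental refresh per day (steady change rate assumed)."""
    incremental_tb = daily_changed_gb * refreshes / 1000  # assumption: 1 TB = 1000 GB
    return round((initial_tb + incremental_tb) * rate_per_tb, 2)


# 2 TB database replicated from AWS US East to another AWS region ($20/TB),
# with about 50 GB of changed data per daily refresh over 30 days.
print(replication_egress_usd(2, 50, 30, 20.00))  # 70.0
```

The initial copy is a one-off, so over a long period the change rate, not the database size, drives the recurring transfer cost.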

3) Writing external functions in Snowflake

External functions in Snowflake are user-defined functions whose code runs outside Snowflake. Snowflake calls the remote service through a proxy service (for example, Amazon API Gateway), sends it rows, and receives results that it then processes like the output of any other function. This gives you access to external application programming interfaces (APIs) and services, such as geocoders, machine learning models, and custom code, without exporting data and re-importing the results, which significantly simplifies data pipelines and lets you run more advanced analysis from within Snowflake. Because rows are sent out of Snowflake, data transfer charges apply when the remote service is in a different region or on a different cloud platform than your account.
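Snowflake sends rows to the remote service as JSON batches in which each row is an array whose first element is the row number, followed by the function arguments. Assuming that format, this Python sketch builds a batch body and measures its size as a rough proxy for the bytes leaving your account. HTTP overhead is ignored, and `request_body`, `request_bytes`, and the sample addresses are hypothetical.

```python
import json


def request_body(rows):
    """Build a batch body in the external-function format: each row is [row_number, arg1, ...]."""
    return {"data": [[i, *args] for i, args in enumerate(rows)]}


def request_bytes(rows):
    """Size of the JSON body in bytes, roughly what leaves Snowflake for this batch."""
    return len(json.dumps(request_body(rows)).encode("utf-8"))


batch = [("1600 Amphitheatre Pkwy",), ("1 Infinite Loop",)]  # hypothetical geocoder inputs
print(request_body(batch))
print(request_bytes(batch))
```

Multiplying the bytes per batch by the number of batches per day gives a first-order estimate to compare against the per-TB rate of your route.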

We will delve deeper into this topic in an upcoming article; in the meantime, see Snowflake's documentation on external functions to learn more.

How to Estimate Snowflake Data Transfer Costs?

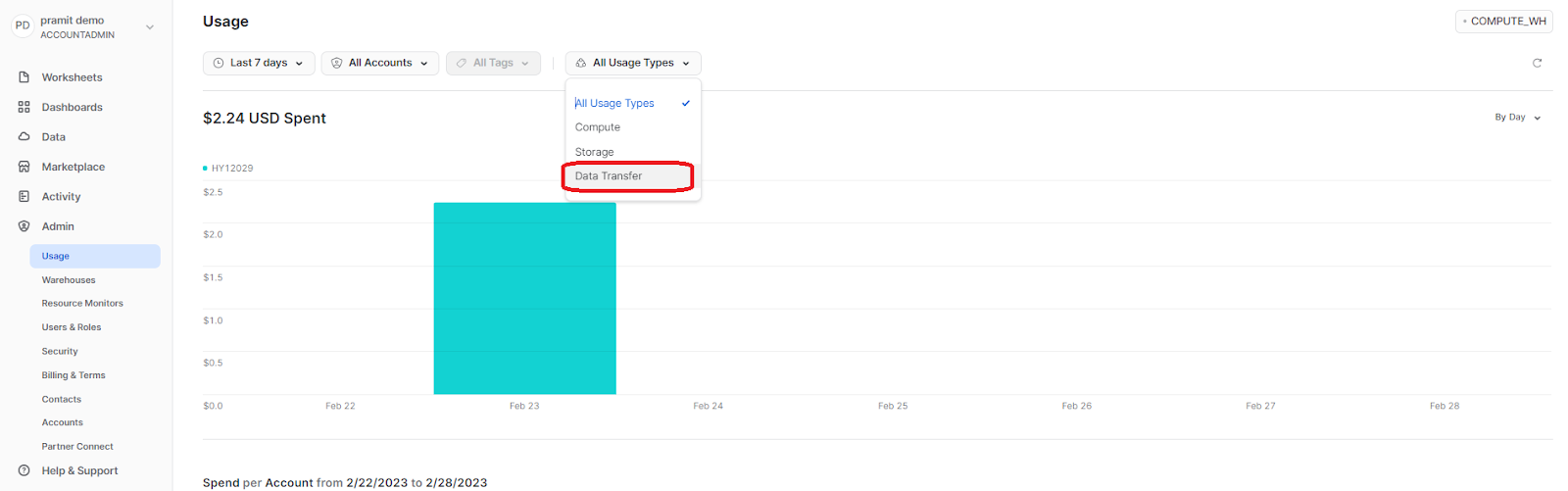

By now, you may be aware that importing data into your Snowflake account is free of cost, but there is a per-byte fee to transfer data across regions on the same cloud platform or to a different cloud platform. To gain a more thorough understanding of historical data transfer costs, users can make use of Snowsight, the Snowflake web interface, or execute queries against the ACCOUNT_USAGE and ORGANIZATION_USAGE schemas. Snowsight provides a visual dashboard for obtaining cost overviews quickly. If you require additional in-depth information, you may run the queries against usage views to analyze cost data in greater depth and even generate custom reports/dashboards.

How to Access Snowflake Data Transfer Costs?

In Snowflake, only the account administrator (a user with the ACCOUNTADMIN role) has default access to view cost and usage data in Snowsight, ACCOUNT_USAGE schema, and the ORGANIZATION_USAGE schema. However, if you have a USERADMIN role or higher, you can grant access to other users by assigning them SNOWFLAKE database roles.

The following SNOWFLAKE database roles can be used to access cost and usage data:

- USAGE_VIEWER : This role provides access to cost and usage data for a single account in Snowsight and related views in the ACCOUNT_USAGE schema.

- ORGANIZATION_USAGE_VIEWER: If the current account is the ORGADMIN account, this role provides access to cost and usage data for all accounts in Snowsight, along with views in the ORGANIZATION_USAGE schema that are related to cost and usage but not billing.

So, by default, only the account administrator has access to cost and usage data. However, SNOWFLAKE database roles can be used to give other users who need to view and analyze cost and usage data access to the data.

How to View the Overall Snowflake Data Transfer Cost—Using Snowsight?

As mentioned above, by default only the account administrator (a user with the ACCOUNTADMIN role) can use Snowsight to get an overview of the overall cost of using Snowflake for any given day, week, or month; users who have been granted one of the SNOWFLAKE database roles above can do so as well.

Here are the steps to use Snowsight to explore the overall cost:

- Navigate to the "Admin" section and select "Usage".

- From the usage-type drop-down list, select "All Usage Types" for the overall picture, or "Data Transfer" to see only data transfer costs.

How to Query Data for Snowflake Data Transfer Cost?

Before we begin querying the data for Snowflake data transfer costs, we must first understand that Snowflake has two schemas for querying data transfer costs: ORGANIZATION_USAGE and ACCOUNT_USAGE. These schemas contain data related to usage and cost and provide very detailed and granular, analytics-ready usage data to build custom reports or even dashboards.

- ORGANIZATION_USAGE schema contains usage and cost data at the organization level, covering all accounts in the organization, including storage usage and cost for their databases and stages. Like ACCOUNT_USAGE, it lives in the shared database named SNOWFLAKE. By default, the ORGANIZATION_USAGE schema is accessible to users with the ORGADMIN or ACCOUNTADMIN roles.

- ACCOUNT_USAGE schema contains usage and cost data at the account level, including storage consumption and cost for all tables inside an account.

Most views in the ORGANIZATION_USAGE and ACCOUNT_USAGE schemas report data transfer as volume (bytes transferred), not cost. To view cost in currency, query the ORGANIZATION_USAGE.USAGE_IN_CURRENCY_DAILY view, which converts the amount of data transferred into a currency amount using the daily price of transferring a TB.

The table below lists the views that contain usage and data transfer costs information from your Snowflake account to another region or cloud provider. These views can be used to gain insight into the costs associated with data transfer.

| View | Description |
|---|---|
| ORGANIZATION_USAGE.DATA_TRANSFER_DAILY_HISTORY | Provides the number of bytes transferred on a given day. |
| ORGANIZATION_USAGE.DATA_TRANSFER_HISTORY | Provides the number of bytes transferred, along with the source and target cloud and region, and type of transfer. |
| ACCOUNT_USAGE.DATABASE_REPLICATION_USAGE_HISTORY | Provides the number of bytes transferred and credits consumed during database replication. |
| ORGANIZATION_USAGE.REPLICATION_USAGE_HISTORY | Provides the number of bytes transferred and credits consumed during database replication. |
| ORGANIZATION_USAGE.REPLICATION_GROUP_USAGE_HISTORY | Provides the number of bytes transferred and credits consumed during replication for a specific replication group. |
| ORGANIZATION_USAGE.USAGE_IN_CURRENCY_DAILY | Provides daily data transfer in TB, along with the cost of that usage in the organization's currency. |

The table above shows different Snowflake views about how data transfer is used and how much it costs, along with their descriptions and schema information. With these views, you can get more granular details about how Snowflake data transfer works and how much it costs.
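Once you have rows from DATA_TRANSFER_HISTORY, a common first step is to total bytes per route to see where the money goes. The Python sketch below assumes rows with TRANSFER_TYPE, TARGET_CLOUD, TARGET_REGION, and BYTES_TRANSFERRED columns, as described in the views table; verify the column names in your account. The sample values and the `bytes_by_route` helper are made up for illustration.

```python
from collections import defaultdict

# Rows assumed to come from a query such as:
#   SELECT TRANSFER_TYPE, TARGET_CLOUD, TARGET_REGION, BYTES_TRANSFERRED
#   FROM SNOWFLAKE.ORGANIZATION_USAGE.DATA_TRANSFER_HISTORY
#   WHERE START_TIME >= DATEADD(day, -30, CURRENT_TIMESTAMP());
rows = [  # hypothetical sample values
    {"TRANSFER_TYPE": "COPY", "TARGET_CLOUD": "AWS", "TARGET_REGION": "us-west-2", "BYTES_TRANSFERRED": 3 * 10**11},
    {"TRANSFER_TYPE": "COPY", "TARGET_CLOUD": "AWS", "TARGET_REGION": "us-west-2", "BYTES_TRANSFERRED": 2 * 10**11},
    {"TRANSFER_TYPE": "REPLICATION", "TARGET_CLOUD": "AZURE", "TARGET_REGION": "eastus2", "BYTES_TRANSFERRED": 10**12},
]


def bytes_by_route(rows):
    """Total bytes per (transfer type, target cloud, target region), largest first."""
    totals = defaultdict(int)
    for r in rows:
        totals[(r["TRANSFER_TYPE"], r["TARGET_CLOUD"], r["TARGET_REGION"])] += r["BYTES_TRANSFERRED"]
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)


for route, total in bytes_by_route(rows):
    print(route, f"{total / 10**12:.2f} TB")
```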

5 Best Practices for Optimizing Snowflake Data Transfer Costs

Looking to save big on Snowflake data transfer costs? First, get familiar with how the platform calculates these costs. But don't stop there! Keep these best practices in mind to cut down your expenses significantly:

1) Understanding Snowflake Data Usage Patterns

Understanding your data usage patterns is one of the most effective ways to optimize Snowflake data transfer costs. By carefully analyzing how and when data is accessed, you can identify transfers that can be cut or run less often without hurting the people who depend on that data.

For example, if you discover that certain datasets are rarely accessed at their destination, you can reduce how often you transfer them. This lowers transfer costs while keeping the data available where it is needed.
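The savings from sending data less often are simple arithmetic. This Python sketch compares daily and weekly full exports of a hypothetical 200 GB dataset to a different cloud at $90/TB (the AWS US East rate). `monthly_export_usd` is a hypothetical helper, and it assumes each run resends the full dataset, with 1 TB = 1000 GB.

```python
def monthly_export_usd(dataset_gb: int, exports_per_month: int, rate_per_tb: float) -> float:
    """Cost of resending a full copy of a dataset on a fixed schedule (1 TB = 1000 GB assumed)."""
    return round(dataset_gb * exports_per_month * rate_per_tb / 1000, 2)


daily = monthly_export_usd(200, 30, 90.00)   # 6 TB a month
weekly = monthly_export_usd(200, 4, 90.00)   # 0.8 TB a month
print(daily, weekly)
```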

2) Reducing Unnecessary Snowflake Data Transfer

Reducing unnecessary data transfers is another way to optimize Snowflake data transfer costs. Minimizing data duplication and consolidating datasets where possible reduces the volume of data you send, which keeps transfer costs, and overall costs, low.

For example, if the same dataset is copied to several locations in different regions, keep as few copies as your users actually need so that the data is transferred only once. Similarly, if datasets are duplicated across multiple accounts in different regions, consolidating them avoids paying to move the same data repeatedly.

3) Using Snowflake Data Compression and Encryption Features

Snowflake offers built-in data compression and encryption features. Compression is the one that lowers data transfer costs: when you unload files, a compression setting such as COMPRESSION = 'AUTO' (gzip for CSV) shrinks the number of bytes that leave your account. Encryption does not reduce costs, but it keeps your data secure in transit, helps you comply with industry regulations, and helps prevent data breaches. Used together, they let you transfer data safely without breaking the bank.
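To get a feel for how much compression can save, the standard-library Python sketch below gzips a made-up, fairly repetitive CSV and compares sizes. Real ratios depend entirely on your data, and the sketch assumes egress is metered on the bytes of the compressed files that are written.

```python
import csv
import gzip
import io


def csv_bytes(rows):
    """Serialize rows to CSV and return the UTF-8 bytes."""
    buf = io.StringIO()
    csv.writer(buf).writerows(rows)
    return buf.getvalue().encode("utf-8")


# Hypothetical, fairly repetitive table: 10,000 users spread over four countries.
countries = ["United States", "Germany", "Japan", "Brazil"]
rows = [["USER_ID", "COUNTRY"]] + [[i, countries[i % len(countries)]] for i in range(10_000)]

raw = csv_bytes(rows)
compressed = gzip.compress(raw)
print(f"raw: {len(raw):,} bytes, gzip: {len(compressed):,} bytes "
      f"({len(compressed) / len(raw):.0%} of the original)")
```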

4) Optimizing your Snowflake data transfer using Snowpipe

Snowpipe is Snowflake's continuous loading service: it loads data from files as soon as they arrive in a stage, using compute resources that Snowflake manages and scales automatically, so you don't need to run a warehouse just for loading. Because loading is ingress, Snowpipe does not incur Snowflake data transfer charges. To keep the whole pipeline inexpensive, stage incoming files on the same cloud provider and in the same region as your Snowflake account, which avoids the egress fees your cloud provider may charge for cross-region reads.

5) Monitoring your data transfer costs and usage

Keeping a close eye on and monitoring your Snowflake data transfer costs is essential for optimizing performance and managing your budget. Snowflake gives you access to comprehensive reports on data transfers, indicating the amount of data transferred, where it moved from and to, and associated costs. This detailed information can be used to control your costs and change how you move data around.
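A simple way to act on those reports is to flag days whose transfer volume jumps well above the recent norm. The Python sketch below compares each day with the mean of the previous seven days. The 2x threshold, the window, and the sample history are arbitrary, hypothetical choices; in practice, the daily totals would come from a view such as DATA_TRANSFER_DAILY_HISTORY.

```python
from statistics import mean


def flag_spikes(daily_bytes, window=7, factor=2.0):
    """Return indexes of days whose volume exceeds `factor` x the trailing `window`-day mean."""
    flagged = []
    for i in range(window, len(daily_bytes)):
        baseline = mean(daily_bytes[i - window:i])
        if baseline > 0 and daily_bytes[i] > factor * baseline:
            flagged.append(i)
    return flagged


# Hypothetical daily transfer totals (arbitrary units).
history = [100, 110, 95, 105, 100, 98, 102, 101, 480, 99]
print(flag_spikes(history))  # [8]
```

A trailing mean keeps the check simple. A sustained increase gradually raises the baseline and stops being flagged, which is usually what you want for growth but worth knowing for alerting.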


Conclusion

And that's a wrap! By now, you should have a solid understanding of the various factors that influence Snowflake's data transfer costs and how to optimize your data movement strategies to keep those expenses in check. Remember, the key to minimizing your Snowflake data transfer costs lies in careful planning and execution. Take the time to analyze your data flows, identify potential bottlenecks, and implement best practices like compression, partitioning, and caching. Don't be afraid to experiment with different approaches and monitor their impact on your costs.

In this article, we've covered:

- Does Snowflake Charge for Data Transfer?

- Data Ingress vs. Data Egress

- Snowflake Data Transfer Costs in AWS

- Snowflake Data Transfer Costs in Azure

- Snowflake Data Transfer Costs in GCP

- Snowflake Features that Trigger Data Transfer Costs

- How to Estimate Snowflake Data Transfer Costs?

- How to Access Snowflake Data Transfer Costs?

- How to View the Overall Snowflake Data Transfer Cost—Using Snowsight?

- How to Query Data for Snowflake Data Transfer Cost?

- 5 Best Practices for Optimizing Snowflake Data Transfer Costs

FAQs

What factors influence the cost of Snowflake data transfer?

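The main factors are the direction of the transfer (ingress is free, egress is billed), where the data goes (the same region, a different region on the same cloud, or a different cloud provider or the internet), the source region, and how much data is transferred.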

Does Snowflake charge for data transfer?

Yes, Snowflake charges for data egress, which is transferring data out of Snowflake to another region or cloud platform. Data ingress (transferring data into Snowflake) is free of charge.

Is data ingress free in Snowflake?

Yes, transferring data into Snowflake (data ingress) is generally free of charge.

Does Snowflake charge for data ingress?

No, Snowflake does not charge for data ingress (transferring data into Snowflake).

What is data egress in Snowflake?

Data egress refers to sending data out of Snowflake to another region or cloud platform, for which Snowflake charges a fee.

Does Snowflake charge for data egress?

Yes, there are fees associated with data egress in Snowflake. Sending data from Snowflake to another region or cloud platform incurs a certain fee.

Will I incur data transfer costs if I move data within the same region?

No. Transfers that stay on the same cloud provider and within the same region do not incur Snowflake data transfer costs.

Can I dispute an unexpected spike in charges?

Yes, you can contact Snowflake support to review and dispute any unexpected data transfer charges on your Snowflake bill.

What Snowflake feature triggers data transfer costs for unloading data?

The COPY INTO <location> command used for unloading data from Snowflake to an external storage location can trigger data transfer costs.

How can you replicate data in Snowflake?

You can replicate data in Snowflake by copying data from one Snowflake account to another account hosted on a different platform or region.

What are external functions in Snowflake?

External functions in Snowflake are user-defined functions that are executed outside of Snowflake, allowing access to external APIs, services, and custom code.

How to estimate Snowflake data transfer costs?

You can estimate Snowflake data transfer costs by using Snowsight or querying the ACCOUNT_USAGE and ORGANIZATION_USAGE schemas.

Who has access to view Snowflake cost and usage data by default?

By default, only the account administrator (a user with the ACCOUNTADMIN role) has access to view Snowflake cost and usage data.

How can you view the overall Snowflake data transfer cost using Snowsight?

In Snowsight, navigate to the "Admin" section, select "Usage", and choose "All Usage Types" from the drop-down list.

What are the two schemas in Snowflake for querying data transfer costs?

The two schemas in Snowflake for querying data transfer costs are ORGANIZATION_USAGE and ACCOUNT_USAGE.

What Snowflake view can be used to view data transfer costs in currency?

The USAGE_IN_CURRENCY_DAILY view can be used to view data transfer costs in currency.

How can you reduce unnecessary Snowflake data transfer?

You can reduce unnecessary Snowflake data transfer by minimizing data duplication and consolidating/merging datasets where possible.

What Snowflake features can help reduce data transfer costs?

Snowflake's built-in data compression reduces the number of bytes transferred and therefore the data transfer cost. Encryption protects data in transit but does not reduce cost.

How can Snowpipe help optimize Snowflake data transfer costs?

Snowpipe loads files continuously using compute that Snowflake manages and scales automatically. Loading is ingress, so it does not incur Snowflake data transfer charges, and keeping the staged files on the same cloud and in the same region as your account also avoids cloud-provider egress fees.

Why is monitoring data transfer costs and usage important?

Monitoring data transfer costs and usage is important for optimizing performance and managing your budget effectively.

How much does Snowflake charge per TB of data storage?

Snowflake bills storage per TB per month. The rate depends on the cloud provider and region hosting your account (AWS, Azure, or GCP) and on whether you pay on demand or through a pre-purchased capacity contract. See Snowflake storage costs.