AWS EMR (Elastic MapReduce) is a fully managed big data platform. It manages the setup, configuration, and tuning of open source frameworks like Apache Hadoop, Apache Spark, Apache Hive, Presto, and more at scale on AWS infrastructure. EMR handles cluster scaling, resource allocation, and lifecycle management. This allows you to work with large datasets for various use cases, from ETL pipelines to ML workloads. EMR uses a pay-as-you-go pricing model. Costs for compute, storage, and other AWS services can add up quickly as your data grows, clusters get bigger, and jobs become more complex. If you're not careful, costs can skyrocket due to inefficient resource use, poor instance choices, and misconfigured storage. That's why AWS EMR Cost Optimization is key. It helps you get the best performance per dollar while maintaining data processing speed, reliability, and scalability.

In this article, we will cover 15 practical AWS EMR cost optimization tips to slash your EMR spending, from managing resources, optimizing storage, selecting the right instances, to developing effective monitoring strategies—and a whole lot more.

Let's dive right in!

Table of Contents

Don’t have time for the full read? Skip ahead to the section you want.

15 Actionable AWS EMR Cost Optimization Tips

🔮 AWS EMR Cost Optimization Tip 1—Use AWS EMR Spot Instances Whenever Possible

Spot instances are spare AWS EC2 capacity sold at steep discounts. On EMR clusters, you can often pay up to ~40 – 90% less than EC2 On-Demand prices by using Spot nodes. The catch is that Spot instances can be reclaimed with a two-minute warning, but many big data workloads are fault-tolerant: Spark and Hadoop can retry tasks if an executor disappears. EMR’s instance fleets let you mix several instance types and AZs, so if one Spot pool ends, EMR can launch another type automatically.

The suitability of Spot Instances depends on your workload's characteristics. Batch jobs that run overnight? Perfect for Spot. Interactive queries that need immediate results? Maybe not so much. But even interactive workloads can benefit from a hybrid approach.

Here is how to implement:

- Start with task/worker nodes on Spot Instances

- Keep master nodes on On-Demand for stability

- Utilize multiple instance types within an instance fleet to enhance Spot availability

- Enable automatic bid management with capacity-optimized allocation

In reality, using Spot for task nodes can drastically cut costs. Just make sure critical services, particularly the master node and HDFS NameNode (typically found on core nodes), stay on On-Demand or Reserved capacity, or use a mix (see next tip).

🔮 AWS EMR Cost Optimization Tip 2—Mix On-Demand and Spot for Reliability

Why put all your eggs in one fragile basket? Combining Spot and On-Demand instances in the same cluster adds reliability. Spot instances are cheap, while EC2 On-Demand instances offer stability. A smart approach is to use master and core nodes on On-Demand or Reserved Instances, and task nodes on Spot. This way, your cluster remains operational even if some Spot workers disappear.

To keep your HDFS core nodes safe, stick with On-Demand instances and use Spot executors to scale out. You can gradually adjust the Spot ratio to find the sweet spot: too much Spot and jobs stall when interruptions occur; too little Spot and you miss out on savings. Use EMR Instance Fleets to specify a percentage of Spot vs On-Demand capacity. EMR will attempt to meet that mix, and if a Spot is interrupted, it can replenish with another instance type in the fleet.

In short:

- Master node — Always On-Demand (super important for keeping the cluster stable)

- Core nodes — 2-3 On-Demand instances for HDFS reliability

- Task nodes — 80-90% Spot Instances for those heavy compute tasks

Tip: Use Spot aggressively, but don’t rely on it 100%. Keeping the master node On-Demand is a common practice to ensure cluster stability even when task nodes are Spot.

Check out the article below to learn how to configure EMR clusters with EC2 Spot instances.

🔮 AWS EMR Cost Optimization Tip 3—Enable EMR Managed Scaling

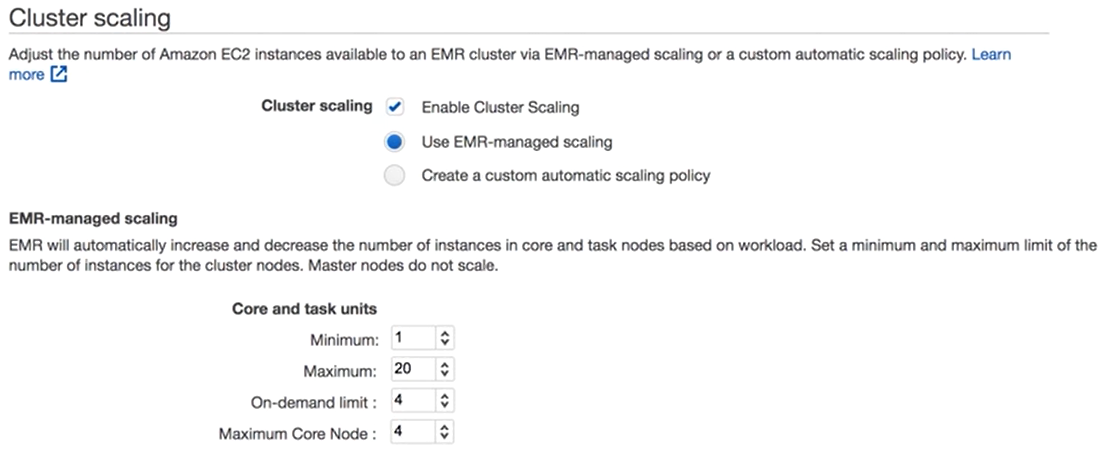

Let AWS EMR adjust and handle your cluster size. EMR’s Managed Scaling feature can automatically add or remove EMR nodes based on workload metrics. Managed scaling allows you to automatically increase or decrease the number of instances or units in your cluster based on workload. AWS EMR continuously monitors cluster metrics, making intelligent scaling decisions to optimize for both cost efficiency and processing speed. Managed Scaling supports clusters configured with either instance groups or instance fleets.

When to Use EMR Managed Scaling?

EMR Managed Scaling is especially valuable for clusters with:

- Variable or Fluctuating Demand — If your cluster experiences fluctuating workloads or extended periods of low activity, Managed Scaling can automatically reduce resources, minimizing costs without manual intervention.

- Unpredictable or Bursty Workloads — For clusters with dynamic or unpredictable usage patterns, Managed Scaling adjusts capacity in real time to meet changing processing requirements.

- Multiple Jobs at Once — When running multiple jobs simultaneously, Managed Scaling allocates resources as needed to match workload intensity, preventing resource bottlenecks and maximizing cluster utilization.

Tip: Managed Scaling isn't usually a good fit for clusters with steady, consistent workloads where resource use stays stable and predictable. In these cases, manual scaling or fixed provisioning might be more suitable and economical.

Here is how Managed Scaling works

EMR Managed Scaling leverages high-resolution metrics, collected at one-minute intervals, to make informed scaling decisions.

The EMR Managed Scaling algorithm continuously analyzes these high-resolution metrics to identify under- or over-utilization. Using this data, it estimates how many YARN containers can be scheduled per node. If the cluster is running low on memory and applications are pending, Managed Scaling will automatically provision additional EMR nodes.

🔮 AWS EMR Cost Optimization Tip 4—Right-Size Your Initial Cluster (Start Small, Then Scale)

Starting with oversized clusters is one of the most expensive mistakes you can make. It's tempting to throw hardware at performance problems, but EMR clusters have diminishing returns beyond a certain point.

The optimal approach is to start small and scale based on actual performance metrics. A single r5.xlarge instance can process surprising amounts of data, especially with proper optimization.

Sizing technique:

- Start with 2-3 EMR nodes for proof of concept

- Run representative workloads and measure bottlenecks

- Scale horizontally (more nodes) for I/O-bound jobs

- Scale vertically (bigger instances) for memory-bound operations

- Use CloudWatch metrics to identify actual constraints

Common Mistakes to Avoid:

- Selecting large instances for small datasets

- Adding EMR nodes when the actual bottleneck lies in network or storage

- Using compute-optimized instances for memory-intensive workloads

- Provisioning based on peak load without considering actual usage patterns

Instance Selection Framework (Starting Points):

- For Spark SQL queries — Memory-optimized instances (r5 family)

- For machine learning — Compute-optimized instances (c5 family)

- For streaming workloads — General-purpose instances (m5 family)

- For mixed workloads — Start with general-purpose, then specialize

🔮 AWS EMR Cost Optimization Tip 5—Auto-Terminate Idle EMR Clusters

An idle EMR cluster is a money pit. A single forgotten m5.4xlarge cluster costs ~$350 - 500+ monthly, even when doing absolutely nothing. Multiply this by a few dozen clusters across different teams, and you're looking at thousands in waste.

EMR provides several termination options, but the most effective is auto-termination combined with idle timeout settings. This automatically shuts down clusters when they're not actively processing jobs.

Auto-termination strategies:

- Idle timeout — Terminate after X minutes of no active jobs

- Step-based termination — Shut down the cluster once all defined steps or jobs are completed

- Time-based termination — Terminate clusters after a specific scheduled duration, regardless of activity

- Custom logic — Use Lambda functions for complex termination rules

Setup Essentials:

- Only turn on termination protection for production clusters

- Choose a decent idle timeout (10-30 minutes usually works)

- Use CloudWatch Events to keep an eye on cluster states

- Automate cluster restarts when they're needed

To enable auto-termination, select the "auto-terminate" checkbox during cluster creation and verify its activation, especially for testing environments.

Important Note: Do not rely solely on YARN job counts to determine idleness. A cluster might appear busy due to HDFS maintenance or system processes. Monitor CPU utilization, network I/O, and disk activity to accurately identify true idleness.

Tip: Implement cluster tagging standards so teams know which clusters are shared vs personal development environments. Shared clusters might need longer idle timeouts, while personal clusters should terminate aggressively.

🔮 AWS EMR Cost Optimization Tip 6—Share and Reuse Clusters

Reuse clusters when you can, rather than spinning up a new one for each job. Launching a new cluster takes some time (it takes a few minutes for EMR to boot up) and can waste resources while it waits for work to do. If you've got regularly scheduled jobs or interactive workloads, think about running a long-running EMR cluster that multiple jobs or users can share. You can serialize or queue jobs using YARN, Step Functions, Airflow, or AWS Glue Workflows. (This way you pay the cluster hourly only once, rather than spinning up many one-off clusters.).

If you’re on Kubernetes (EKS) already, EMR on EKS can help share resources efficiently. Unlike YARN on EMR, where only one app can fully utilize the master and some idle resources go unused, EMR on EKS allows multiple Spark jobs to share the same EMR runtime on a single AWS EKS cluster.

In short, if concurrency is possible, using fewer, larger clusters (or a shared AWS EKS cluster) often costs less than many short-lived ones. You still pay for the uptime, but you avoid bootstrapping costs and wasted idle nodes.

🔮 AWS EMR Cost Optimization Tip 7—Use AWS EC2 Reserved Instances or Savings Plans

If you know you'll always need a certain amount of EMR capacity, lock in with Reserved Instances (RIs) or Savings Plans for discounts. Both Reserved Instances and Savings Plans reduce the AWS EC2 (compute) cost under an EMR cluster. A 3-year AWS EC2 Reserved Instance, for instance, can cut your costs by as much as ~72% compared to On-Demand. Here's what happens with Reserved Instances (RIs): EMR simply consumes matching reservations first. If you have, say, one m5.xlarge Reserved Instance purchased in us-east-1, and you launch an EMR cluster with two m5.xlarge nodes, the first node uses the RI rate and the second is billed On-Demand.

AWS Savings Plans are super flexible. You can choose a Compute Savings Plan, which goes up to ~66% off and can be used with any AWS EC2 instance family and region, as long as you meet a minimum spend requirement. Or an EC2 Instance Savings Plan offers up to 72% off, but it's limited to one instance family in a single region. The good news is that both types of plans work with EMR clusters, since EMR relies on AWS EC2 behind the scenes. If your EMR workload is steady and predictable, consider buying enough Savings Plans or Reserved Instances to cover your core nodes (or your entire average cluster) and you can save significantly. Just keep in mind that these discounts only apply to the EC2 part of the bill; you'll still have to pay for the EMR service fee and any EBS/storage costs separately.

Important Note: These discounts cover the AWS EC2 part; the EMR service fee and any EBS/storage costs are separate charges.

Tip: Reserved Instances (RIs) are not cheap upfront, and they tie you to specific instance types. But for steady baselines, they make sense. You don’t have to plan every core and task node, though – even reserving a portion of your cluster (say 25% to 50% of capacity) and using Spot for the rest can yield big savings.

🔮 AWS EMR Cost Optimization Tip 8—Pick the Right Instance Types

Not all AWS EC2 instance types are equally suited for every job. Choosing the right EC2 family instance (CPU-optimized, memory-optimized, etc.) types avoids waste. EMR supports most families (M, C, R, I, etc.), so pick based on needs:

- General-purpose workloads — use M-series instances (M5, M6g). They offer a balanced starting point.

- Compute-bound jobs (CPU-heavy Spark/Hadoop tasks) — use C-series (C5, C6i, etc.).

- Memory-heavy jobs (large Hive queries or HBase) — use R-series (R5, R6g).

- Storage/HDFS-heavy (lots of disk I/O or HDFS data) — consider I3/I4i (NVMe SSD) or D-series.

Also, new-generation instances tend to have better price-to-performance. ARM-based Graviton instances (like M6g, C6g, R6g), for instance, often cost less per vCPU than their x86 counterparts because AWS owns the silicon.

As a general approach, run a benchmark of one of your jobs on different instance types. Monitor metrics such as CPU utilization and cost. If a smaller, cheaper instance sees 100% CPU usage and slower job times, try moving one size up. Sometimes, using a slightly bigger instance at 50% utilization costs less overall because the job finishes faster. Balance these factors: start small and scale based on job demands, which includes trying different EMR instance sizes.

🔮 AWS EMR Cost Optimization Tip 9—Go Big – Larger Instances Can Lower EMR Fees

Paradoxically, sometimes a bigger instance size can lower costs. EMR (and AWS generally) charges by total compute‑seconds, so 50 small EMR nodes for two hours costs the same as 100 small nodes for one hour (100 node‑hours). If your workload runs faster on more nodes, do it – your bill may stay the same or even drop if larger nodes run more efficiently.

Large instances often have a lower per‑vCPU rate. For example, two 8‑vCPU instances can cost less than four 4‑vCPU instances, since EMR pricing does not scale linearly with CPU count. Bigger nodes also reduce network hops between data and CPU, cutting shuffle time. Keep your core nodes fixed (often larger) and scale only task nodes, as larger cores hold more data reliably.

There are trade-offs to consider: larger nodes mean fewer executors and less granularity. For moderately sized jobs or multi‑tenant clusters, the overhead savings usually outweigh that. You can test this by launching two clusters (one with N large nodes and one with many small nodes) and comparing runtime and cost. If the large‑node cluster is cheaper, stick with it. At minimum, avoid defaulting to the smallest available machines; instead, experiment with larger sizes within an instance family to see if they reduce your overall bill.

🔮 AWS EMR Cost Optimization Tip 10—Optimize Data Formats and Storage

How you store data on S3/HDFS has a big cost/performance impact. Use columnar formats and compression: convert CSV/JSON logs to Parquet or ORC with a compression codec like Snappy, GZIP, LZ4 or zstd (Zstandard). Columnar formats drastically cut the bytes scanned by analytics engines.

Partition your data to skip unnecessary reads. For time-series data, store by date (year/month/day folders). Spark and Hive will only read the relevant partitions, avoiding full-table scans. Avoid over-partitioning (aim for files > 128MB per partition). And also make sure to consider bucketing for joins and aggregations

Also use data lifecycle policies on S3. Move older, infrequently accessed data to cheaper tiers.

- Hot data — S3 Standard for frequently accessed data

- Warm data — S3 Standard-IA for monthly access patterns

- Cold data — S3 Glacier for archival with retrieval flexibility

- Deep archive — S3 Glacier Deep Archive for compliance/backup

For temporary or shuffle storage, use cheaper EBS (gp3 instead of gp2) or even an instance store if possible. Every bit of data efficiency trims AWS EC2 runtime and storage bills.

In short, store data in the right format and storage tier. This will cut both S3 bills and EMR compute time.

🔮 AWS EMR Cost Optimization Tip 11—Use AWS S3 Storage Classes and Lifecycle Policies

Big data is often stored on AWS S3, and using the right tier can save you a pretty penny. For data you access all the time, like hot logs or tables, standard AWS S3 works just fine. But for older data that you don't access as often, there are cheaper options. AWS’s S3 Intelligent-Tiering moves your files between "frequent" and "infrequent" tiers. It does this based on how often you access them. This way, you won’t pay more for rarely used files. If you have data mainly for archiving, like audit records, S3 Glacier or Glacier Deep Archive is a great choice. It costs only a few cents per TB-month.

To use these classes:

- Enable AWS S3 Intelligent-Tiering on buckets where access patterns vary.

- Set up AWS S3 lifecycle rules: e.g. transition objects older than 30 days to Infrequent Access, and objects older than 1 year to Glacier.

These moves can cut your storage bill by 30-70% depending on how you use it. If you've got data that you rarely need... AWS S3 Glacier is a super cost-effective storage solution. The catch is, it takes longer to get your data back or you pay more per GB to retrieve it, so it's best for archival data or backups. But being smart about storage tiers means your EMR work - which usually reads from S3 - gets the data it needs from a cost-effective spot, not a full-price one that's just wasting storage on old logs.

🔮 AWS EMR Cost Optimization Tip 12—Tag Everything and Use Cost Explorer/Budgets

You can’t manage what you don’t measure. Cutting costs also means tracking them. Tag all EMR clusters, AWS EC2 instances, EBS volumes, and S3 buckets with meaningful keys (for example, project, team, env) to track usage. Then use AWS Cost Explorer or the Cost and Usage Report to break down spending by tag. With good tags, you can see exactly who spent what on EMR. Here are some essential EMR tags:

- Team/Department — Who owns this cluster?

- Project — Which business initiative is this supporting?

- Environment — Development, staging, or production?

- Owner — Who to contact for questions or issues?

- Scheduled-Termination — When should this cluster die?

For example, tag clusters as Project=DataLake and then filter Cost Explorer by that tag to see exactly how much that project spent.

Also, beyond tags, set budgets and alerts. Use AWS Budgets to monitor your overall EMR spend (or even separate budgets for AWS EC2 vs EMR service fees). You can set thresholds (say, 50%, 80%, 100% of the monthly forecast) and notify your team by email or Slack if those thresholds are exceeded. The idea is to catch anomalies early. Review Cost Explorer charts weekly or monthly. Look for surprising spikes (maybe a runaway cluster) and drill in. The combination of tags + Cost Explorer gives full visibility into which pipelines or teams drive EMR spend.

TL;DR: tagging + monitoring tools = cost visibility, which is the first step to cost savings. You can’t fix what you don’t measure.

🔮 AWS EMR Cost Optimization Tip 13—Use Resources Wisely (Tune Spark/YARN Configs)

Tweaking and fine-tuning your Spark and YARN configs can help you max out your cluster. First off, take a look at container sizing: by default, EMR allocates a set amount of memory and CPU to each YARN container, based on the instance type you're using. If your jobs never use all that memory, you’re wasting capacity. Run:

yarn node -list -showDetails Or use CloudWatch to see actual memory and CPU usage. Then adjust these Spark parameters:

spark.executor.memory— balance parallelism and memory per taskspark.executor.cores— generally aim for 4-6 cores per executor for optimal performance

Also adjust memory overhead accordingly. Say, if you allocate 4 GB to each executor but only use 2 GB, cut it to 2.5 GB to allow more executors per node. EMR Observability (or Spark UI) helps here: monitor the fraction of allocated RAM actually in use.

Enable Spark dynamic features - like Adaptive Query Execution (AQE) and Dynamic Partition Pruning are on by default in newer EMR releases. AQE will, for instance, coalesce small shuffle partitions into larger ones. This way, you avoid a ton of tiny tasks. Dynamic partition pruning skips irrelevant data when joining with a small table. Both of these features reduce shuffle size and I/O. Likewise, set spark.sql.shuffle.partitions to a reasonable number for your cluster size; too high means many idle tasks.

On YARN, use fair or capacity scheduling so multiple jobs can share resources well. And disable Spark speculative execution unless you have a lot of straggling tasks – it can double work for slight speed gain, hurting cost. Finally, if using Tez (Hive) or plain MR, tune mapreduce.map.memory.mb and reduce.memory settings so containers fit real job needs. Properly sizing containers to actual workload is key to high utilization. In short, right-sizing executors and using adaptive query features ensure that your cluster runs lean, cutting unnecessary waiting and idle resources.

You can also use AWS Glue or Spark's SQL UI to get a closer look at your queries. Simple tweaks like filtering out unnecessary columns can make a big difference and speed up your EMR jobs. Keep an eye on CloudWatch for any signs of waste too. Key metrics like YARN memory and HDFS usage are a good place to start - they'll help you figure out if you need to resize or reconfigure. This tip is more process than magic bullet, but making your jobs leaner directly translates into lower runtime and cost.

🔮 AWS EMR Cost Optimization Tip 14—Choose the Right EMR Deployment Option (EMR on EC2, EMR on EKS, or AWS EMR Serverless)

AWS has three EMR deployment options. Each option has different costs and use cases. Pick the wrong one, and your costs may rise sharply or force you into architectural constraints.

You provision EC2 instances plus EMR service. You pay the EMR per-instance fee plus the EC2/EBS costs. This is flexible (you control all settings) but means you pay for any idle time the cluster is up.

2) EMR on EKS

If you already use Kubernetes, you can run EMR workloads on an existing AWS EKS cluster. You pay EMR’s per‑application charge plus AWS EC2/EBS (or Fargate) costs for Spark executors. EMR on EKS lets multiple apps share nodes, improving utilization. You can also use Spot for executors (keeping the driver on On-Demand for stability), gaining up to ~90% savings on those spots. Choose this option for containerized deployment or multi‑tenant sharing; it can reduce idle master costs since Kubernetes masters are shared.

AWS manages compute for you. You create “serverless applications” and submit jobs without provisioning AWS EC2 clusters. There is no cluster‑uptime charge; you pay only for the vCPU, memory, and temporary storage your job consumes, billed per second (rounded to one minute). Serverless suits short or unpredictable jobs because you only pay for the exact resources consumed. In contrast, very long-running heavy jobs may sometimes cost more on Serverless than on a well-utilized fixed cluster (since on EC2, you can apply RIs/Savings Plans). AWS EMR Serverless has no upfront costs and bills only on consumed resources.

When to use each option

- Use EMR on EC2 for stable, high-throughput jobs

- Use AWS EMR Serverless for ad-hoc Spark/Hive queries or bursty workloads

- Use EMR on EKS if you want Kubernetes integration

| EMR Variant | Description | Best for | Cost Model | Pros | Cons |

| (EMR on EC2) Standard EMR | Runs directly on EC2 instances, full cluster control | Long-running, predictable workloads | Pay for underlying EC2 instances + EMR charges | Full control, all EMR features, extensive customization | Requires manual cluster management, slower startup times |

| EMR on EKS | Runs on Amazon Elastic Kubernetes Service (EKS), leveraging existing EC2 instances | Containerized environments, job isolation, Kubernetes-native operations | EKS cluster fees + EC2 instances + EMR job charges | Better resource sharing, managed experience | Requires Kubernetes expertise |

| AWS EMR Serverless | Serverless option, no cluster management required | Sporadic workloads, event-driven processing, minimal ops overhead | Pay per vCPU-hour and GB-hour consumed | No cluster setup, charges only for resources used | Limited to specific use cases, minimum 1-minute charge |

- Utilization < 30% — AWS EMR Serverless likely cheaper

- Utilization > 70% — Standard EMR with RIs/Savings Plans

- Mixed workloads — EMR on EKS for better resource sharing

- Simple batch jobs — AWS EMR Serverless for operational simplicity

🔮 AWS EMR Cost Optimization Tip 15—Monitor and Review Regularly

AWS EMR cost optimization is a continuous process, not a one-time fix.

Set up dashboards and schedule regular reviews. Use AWS Cost Explorer to track your EMR spending, and enable AWS Budgets to alert you when you exceed your targets.

Try simple things like tagging old clusters to see how long they’ve been running. Shut down clusters that remain idle. Compare the instance types you used this month versus last month. Consider migrating to newer instance types or adjusting sizes to save money.

Monitor CloudWatch and EMR metrics. Track IsIdle to spot idle clusters, HDFSUtilization to detect storage bottlenecks, and ContainerPendingRatio to identify jobs waiting for resources. These metrics will guide you when resizing or reconfiguring your clusters.

Review Spark logs and the application history to uncover inefficient queries, such as skewed joins or Cartesian products. Check S3 and EBS metrics: high GET/PUT rates or low EBS burst credits indicate elevated I/O costs.

Treat your EMR clusters like assets. Perform weekly or monthly audits. Clean up unused resources and adjust settings as your workloads change.

Turn off debug logging in production. Implement scheduled shutdowns and scaling policies to capture savings of tens of percent each month. Review your bill and usage graphs regularly to catch small issues before they grow.

After each monthly invoice, analyze EMR spend by job, team, or cluster. Ask why costs were high and whether they were justified. Could you have used Spot instances or a smaller cluster?

Repeat this cycle. Each time you find an inefficiency, apply one of these tips. Over time, you’ll uncover more savings.

🔮 Bonus EMR Cost Optimization Tip—Leverage FinOps Tools like Chaos Genius

Last but not least, think about using FinOps and cost-management tools to automate insights. AWS has some native options like Cost Explorer, AWS Budgets, and Trusted Advisor. You can also go with specialized FinOps platforms like ours, Chaos Genius. We use AI‑driven autonomous agents to monitor data workloads. Right now we support Snowflake and Databricks, and we’ll add AWS EMR very soon. Want to see it in action? Join the waitlist now and take it for a spin 👇.

Conclusion

And that’s a wrap! AWS EMR is really strong, but charges might sneak up on you if you don't pay attention. The tips above - from smart purchasing to data and cluster tuning - cover all the bases. Start with the easy stuff (spot instances, idle shutdowns, right-sizing), and then move on to things like data formatting, tagging, and long-term commitments. Continue monitoring and iterating. With strong and disciplined FinOps practices, you can often slash your AWS EMR costs by ~20-50% or more without hurting performance. It's not a one-time fix; make it part of your regular data pipeline routine. Stay up to date on new AWS features and pricing changes too. Over time, your EMR clusters will become more efficient, your team will budget better, and you'll still have the necessary data processing power you need.

In this article, we have covered:

- 15 AWS AWS EMR Cost Optimization Tips to Slash Your EMR Spending

- 🔮 EMR Cost Optimization Tip 1—Use AWS EMR Spot Instances Whenever Possible

- 🔮 EMR Cost Optimization Tip 2—Mix On-Demand and Spot for Reliability

- 🔮 EMR Cost Optimization Tip 3—Enable EMR Managed Scaling

- 🔮 EMR Cost Optimization Tip 4—Right-Size Your Initial Cluster (Start Small, Then Scale)

- 🔮 EMR Cost Optimization Tip 5—Auto-Terminate Idle EMR Clusters

- 🔮 EMR Cost Optimization Tip 6—Share and Reuse Clusters

- 🔮 EMR Cost Optimization Tip 7—Use EC2 Reserved Instances or Savings Plans

- 🔮 EMR Cost Optimization Tip 8—Pick the Right Instance Types

- 🔮 EMR Cost Optimization Tip 9—Go Big – Larger Instances Can Lower EMR Fees

- 🔮 EMR Cost Optimization Tip 10—Optimize Data Formats and Storage

- 🔮 EMR Cost Optimization Tip 11—Use AWS S3 Storage Classes and Lifecycle Policies

- 🔮 EMR Cost Optimization Tip 12—Tag Everything and Use Cost Explorer/Budgets

- 🔮 EMR Cost Optimization Tip 13—Use Resources Wisely (Tune Spark/YARN Configs)

- 🔮 EMR Cost Optimization Tip 14—Choose the Right EMR Deployment Option (EMR on EC2, EMR on EKS, or AWS EMR Serverless)

- 🔮 EMR Cost Optimization Tip 15—Monitor and Review Regularly

- 🔮 EMR Cost Optimization Bonus Tip—Leverage FinOps Tools like Chaos Genius

… and so much more!

FAQs

What are the primary cost drivers in AWS EMR?

AWS EMR bills break down into:

- AWS EC2 compute hours (vCPU‑hour and memory‑hour rates)

- EMR service fee (per‑instance‑hour surcharge on top of EC2)

- EBS volume charges for attached storage

- S3 storage and request fees when reading or writing data

- Data transfer costs (between AZs, regions, or out to the internet)

- CloudWatch logging/metrics if you push verbose logs or high‑resolution metrics

Compute usually dominates, but storage and network can add up on heavy workloads

What are Spot Instances, and how do they reduce AWS EMR costs?

Spot Instances let you bid on unused AWS EC2 capacity at steep discounts (often 40–90% cheaper than On‑Demand). EMR integrates Spot for task nodes or fleets; if AWS EC2 reclaims a Spot node, Spark/YARN retries fail to work transparently. That slashes your compute spend while preserving fault tolerance.

What's the single most effective AWS EMR Cost Optimization technique?

Using Spot instances for appropriate workloads provides the biggest immediate cost reduction (up to 90% savings) compared to EC2 On-Demand instances. But combining Spot with auto-termination and rightsizing typically provides the best overall impact.

How do I know if my EMR clusters are candidates for Spot instances?

Fault-tolerant batch jobs, ETL processes, and analytics workloads are ideal for Spot. Interactive queries and real-time streaming applications may need mixed EC2 On-Demand/Spot configurations. Test with your actual workloads to determine suitability.

How does EMR Managed Scaling differ from custom auto‑scaling policies?

Managed Scaling uses an EMR‑built algorithm that samples workload metrics every minute and adjusts cluster size within your min/max limits. It works with both instance groups and fleets and lives at the cluster level. On the other hand, Custom Auto‑Scaling relies on your CloudWatch metrics and scaling rules defined per instance group. You control evaluation periods, cooldowns, thresholds, and exact scaling actions

How often should I review and optimize AWS EMR costs?

Conduct basic reviews monthly and comprehensive optimization quarterly. Set up automated monitoring to catch anomalies immediately.

Is it better to run many small instances or fewer large instances?

Fewer large nodes can cut EMR service fees, since you pay the per-node fee less often. Many small instances give finer parallelism but incur higher aggregate node fees. Benchmark both setups: compare runtime × cost to find your sweet spot.

Should I use AWS EMR Serverless for all new workloads?

AWS EMR Serverless is cost-effective for sporadic workloads with low utilization (< 30%). For consistent, high-utilization workloads, traditional EMR with Reserved Instances is usually cheaper.

What's the biggest AWS EMR Cost Optimization mistake organizations make?

Running clusters at fixed capacity without auto-scaling or auto-termination. Many organizations provision for peak load and forget to scale down, resulting in massive waste during off-peak periods.

What monitoring tools do I need for effective AWS EMR Cost Optimization?

Start with AWS Cost Explorer and CloudWatch for basic monitoring. Add specialized FinOps tools like Chaos Genius for advanced optimization as your usage scales.

Does EMR pricing include S3 or only AWS EC2?

EMR pricing covers AWS EC2 compute and EBS volumes plus the EMR service fee. S3 storage, requests, and data transfer incur separate standard S3 rates.

How do AWS Cost Explorer/Budgets help in managing AWS EMR costs?

Cost Explorer breaks down spend by service, account, tag, or cluster. You can spot unexpected spikes in AWS EC2 vs EMR fees. On the other hand, AWS Budgets lets you set cost or usage thresholds (for EMR, EC2, S3, Savings Plans) and sends alerts via email or SNS when you hit defined limits.

How do I calculate ROI for EMR optimization initiatives?

Track monthly AWS EMR costs before and after optimization, factor in implementation time and any performance impacts, then calculate savings over 12 months.